This article explores report filtering using Excel Slicer and SQL Server Reporting Services.

Read more »

SQL Server Integration Services (SSIS) and PowerShell (PS) together offer a plethora of opportunities, and some shortcuts, when you have to import, export, or at times move data. I have come across packages that contain a Script Task with lines and lines of C# code; done with PowerShell instead, that package could be much easier to maintain. Overall, the most common thing I see a Script Task doing is accessing the file system or manipulating a file. One thing I hope picks up speed in the BI world of SSIS is utilizing PowerShell for these types of actions. This is not to say one is better than the other, as you should pick what is best in your eyes, but when I can do operations against the file system with a one-liner in PowerShell, it is just easier to maintain that in the package. In this article I will go over how you can use the most common task for executing PowerShell code in an SSIS package: the Execute Process Task.

Read more »

Power BI is an extremely powerful tool to create dashboards and reports. In the last article, we learned how to create a Data Warehouse in Azure. In this new chapter, we will learn how to create reports from the Data Warehouse in Azure using Power BI in Azure.

Read more »

In the world of SSIS development architecture, preference should be given to extracting data from flat files instead of non-Microsoft relational databases. This is because you often don’t have to worry about the driver support and compatibility issues on your SSIS development/server machine that are often attributed to non-Microsoft database vendors. In fact, I’ve been in several situations whereby we could not upgrade to another version of SSIS (i.e. BIDS to SSDT) due to the lack of external vendor driver compatibility in the newer versions of SSIS.

Read more »

In previous chapters, we showed how to create SQL Databases in Azure. In this new chapter, we will show you the new SQL Data Warehouse Service in Azure.

Read more »

In the article, Multiple Options to Transposing Rows into Columns, I covered various options available in SQL Server for rotating a given row into columns. One of the options included the use of the PIVOT relational operator. The mandatory requirement of the operator is that you must supply the aggregate function with only a numeric data type. Such a requirement is usually not an issue, as most aggregations and subsequent pivoting are performed against fields of a numeric data type. However, sometimes the nature of business reporting requests may be such that you are required to cater for pivoting against non-numeric data types. In this article we take a look at how you can deal with such requirements by introducing a workaround for pivoting on non-numeric fields.

Read more »

Introduction

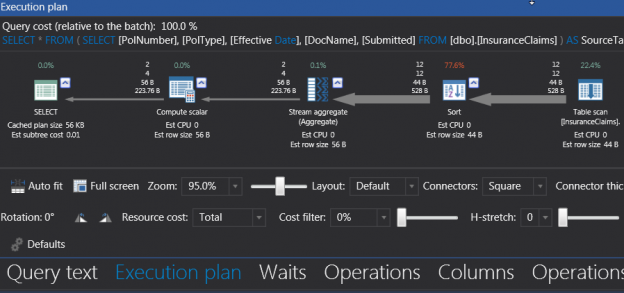

One of the primary functions of a Business Intelligence team is to give business users an understanding of the data created and stored by business systems. Understanding the data should give business users an insight into how the business is performing. A typical understanding of data within the insurance industry could relate to measuring the number of claims received vs successfully processed claims. Such data could be stored in a source system as per the layout in Table 1:

Read more »

There are great Microsoft tools to access SQL Server in Azure. However, as Database Developers and Administrators, we need to help the end user access the information using a familiar and intuitive interface.

Most end users have a good knowledge of MS Excel. That is why it is recommended to connect our SQL Azure database to Excel. That way, the end user will be able to easily create reports and charts and generate useful information.

In this chapter, we will show how to connect to SQL Azure using Microsoft Excel.

Read more »

In a past chat back in January 2015, we started looking at the fantastic suite of data mining tools that Microsoft has to offer. At that time, we discussed the concept of a data mining model, creating the model, testing the data and running an ad-hoc DMX query. For those folks who may have missed this article, the link may be found immediately below:

Read more »

A few days ago I received an interesting challenge from one of our clients. The lady, named Linda, was attempting to estimate her potential monthly revenue recognition for the fiscal year running from January 1, 2015, through December 31, 2015. Linda sells goods and services (each class yielding differing sales margins).

In the first portion of this two-part discussion, we shall be looking at the revenue projections for goods.

Read more »

A few days ago I received an email from a gentleman in the healthcare industry. He had read an article that I had published a few years back on a well-known SQL Server website. Based on the original article, he requested a few ideas on how to expand the sample code to do a rolling three-month revenue summary, in addition to a rolling three-month revenue average.

In today’s get together, we shall be looking at doing just this!
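
To make the request concrete, here is a minimal Python sketch of a rolling three-month total and average. It is only an illustration of the calculation, not the code from the article: the function name `rolling_three_month`, the sample figures, and the choice to let the first two months use a shorter window are all assumptions.

```python
from collections import deque


def rolling_three_month(revenues):
    """Return (month, rolling_total, rolling_average) for each month.

    `revenues` is a list of (month_label, amount) pairs in calendar order.
    The window holds the current month and up to two preceding months, so
    the first two rows are computed over fewer than three months.
    """
    window = deque(maxlen=3)  # oldest month drops out automatically
    results = []
    for month, amount in revenues:
        window.append(amount)
        total = sum(window)
        results.append((month, total, total / len(window)))
    return results


# Hypothetical sample data, purely for illustration.
monthly_revenue = [
    ("2015-01", 100.0),
    ("2015-02", 200.0),
    ("2015-03", 300.0),
    ("2015-04", 400.0),
]

summary = rolling_three_month(monthly_revenue)
for month, total, average in summary:
    print(month, total, average)
```

In a database setting the same idea would typically be expressed with a windowed aggregate over the three most recent rows; the sketch simply shows what each row of such a result contains.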

Read more »

A few days back I was looking at ways to access raw data from within Microsoft Dynamics CRM in an effort to extract the raw data and to place it in our data warehouse. I started to explore utilizing OData and SSIS to pull the necessary data from the cloud to our local warehouse.

Whilst there are known authentication issues between Dynamics CRM and the Microsoft OData SSIS data source (and thus we could not utilize this access method), I thought it to be so powerful that I began looking for other constructive ways in which to utilize the OData Source.

Read more »

Have you ever felt like pulling your hair out, trying to ascertain exactly which fields in your existing Reporting Services datasets are being utilized by your reports? This happened to me recently during a corporate conversion and cleanup exercise for a database migration to the cloud.

The “aha moment” came after having presented a paper at the PASS SQL Server Nordic Rally (March 2015), when one attendee came up to me and asked if I knew of a method to do this. As they say, ‘necessity is the mother of invention’ and, with my interest piqued, I played around until I came up with the solution that we are going to chat about today. The end solution may be seen below.

Read more »

Oft times we are forced into situations where we must clearly think outside of the box. In today’s “get together”, we are going to discuss a challenge that I encountered during the last week of February of this year. The client had been charting weekly business calls placed by his sales reps. He had been tracking these results within an Excel spreadsheet (see the screen dump below) and he would be using this spreadsheet to report the sales reps’ progress going forward. My task was to source the data for the corporate reports in Reporting Services from this spreadsheet, and to do so on a weekly basis. The client, being resistant to change, was not willing to change the format of the spreadsheet to something more suitable for the chart that he wished to produce (see immediately below).

Read more »

Introduction

As you will remember from our last “get together” we created an application that permitted us to report upon financial data based upon an unorthodox financial year. In fact, our fiscal year started in July and ended in June. We created a chart to display the data.

In today’s “get together” we are going to push our application a bit further and build in a subreport which will bring up the underlying data when the end user clicks upon the chart for any particular month. Thus should the user click on February 2015, then all of February’s data (for the selected funds) is shown in a matrix. If the user chooses March, then March’s data is shown.

Read more »

I recently heard from a lady from overseas who wanted to find a quick and dirty mechanism of extracting data for a given date range (based upon a fiscal year that started July 1st and ended June 30th). The idea interested me and as always, I had to try it out.

In today’s “get together”, we are going to have a look at how this may be achieved.
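
As a rough illustration of the date logic involved, the Python sketch below works out the July-to-June fiscal year that contains a given date. It is not the approach taken in the article; the function name `fiscal_year_range` and the `start_month` parameter are hypothetical.

```python
from datetime import date, timedelta


def fiscal_year_range(day, start_month=7):
    """Return (first_day, last_day) of the fiscal year containing `day`.

    The fiscal year is assumed to start on the 1st of `start_month`
    (July by default) and to end on the day before the next one starts.
    """
    # Dates before the start month belong to the fiscal year that began
    # in the previous calendar year.
    start_year = day.year if day.month >= start_month else day.year - 1
    first_day = date(start_year, start_month, 1)
    last_day = date(start_year + 1, start_month, 1) - timedelta(days=1)
    return first_day, last_day


start, end = fiscal_year_range(date(2015, 2, 15))
print(start, end)
```

The resulting pair of dates can then be used as the lower and upper bounds of a date-range filter.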

Read more »

In our last two chats, we discussed enterprises that have had financial years that began in July and ended at the end of June. One of our clients works with this fiscal calendar and their financial folks are Excel “Fundis” (Fundisa is a Nguni word for “expert”). Many of their reports contain the current month’s sales, in addition to running totals from the beginning of the fiscal year to date.

Read more »

Oft times we are forced into situations where we clearly need to think outside of the box. A case in point arose early in 2014, when one of our clients required a “quick and dirty” front end to modify data within a table that reflected the outstanding balances (of their clients) and the attempts that they had made to recover these funds. Master Data Services seemed to be the way to go!

Read more »

A few months back, I presented a paper at SQL Saturday 327 in Johannesburg, South Africa. Late last month I received an email from one of the attendees. His issue was quite interesting and I decided to share it with you. The gentleman wanted an SSIS script that would permit him to extract data from a SQL Server database table and place it in a CSV file with a dynamically allocated name. Being a strong advocate of using the SSIS toolbox, I experimented with an alternative solution. We are going to construct this solution in today’s get together.

Let’s get started.

Read more »

In past chats, we have had a look at a myriad of different business intelligence techniques that one can utilize to turn data into information. In today’s “get together” we are going to try to pull all these techniques together, rationalize our development plans, and moreover, look at some good habits to adopt or, for want of better words, SQL Server Reporting Services best practices.

Read more »

In the previous posts

Deployment to several databases using SQL Server Data Tools and TFS using a Custom Workflow file

Deployment to several databases using SQL Server Data Tools and Team foundation Server

Continuous integration with SQL Server Data Tools and Team Foundation Server

I have been mostly writing about the interaction between SQL Server Data Tools and Team Foundation Server. Microsoft provides a hosted version of Team Foundation Build Service called Visual Studio Online. The configuration and functionality are mostly the same as what I have previously been writing about, but there are some specific things that we need to be aware of when using the Visual Studio Online Build Service.

Read more »

In past chats, we have had a look at a myriad of different Business Intelligence techniques that one can utilize to turn data into information. In today’s get together we are going to have a look at a technique dear to my heart and often overlooked. We are going to be looking at data mining with SQL Server, from soup to nuts.

Read more »

In the previous blog post, Deployment to several databases using SQL Server Data Tools and Team foundation Server, I illustrated how it is possible to use TFS and a batch file to deploy a database to several SQL Server instances or to deploy several SQL Server databases to several instances. The main way to achieve that in the previous post was using a batch file. For more information about this technique, please have a look at that blog post.

In this post, on the other hand, I will demonstrate how the same functionality can be achieved using a Windows Workflow Foundation (XAML) deployment file and Team Foundation Server.

Read more »

In an earlier “get together”, we had a quick look at the DAX language and how to construct useful queries. In today’s conversation we shall be concentrating on utilizing the knowledge that we obtained from the earlier article and seeing how these queries may be utilized for “multiple value” query selection criteria (against a tabular database).

Enough said, let us get started!

A few years back, a client asked me to implement a quick and dirty “security mechanism” to control what data the myriad of users were able to view within their reports. There were numerous tables with multiple columns and all departments (within the enterprise) had their data within these tables.

SQLShack Industries has tasked us with creating a similar quick and dirty “security mechanism”. We shall attack this challenge by creating the necessary stored procedures (to extract the required data) and then utilize these stored procedures to render and consume the data within our reports.

Read more »

© Quest Software Inc. ALL RIGHTS RESERVED.