Introduction

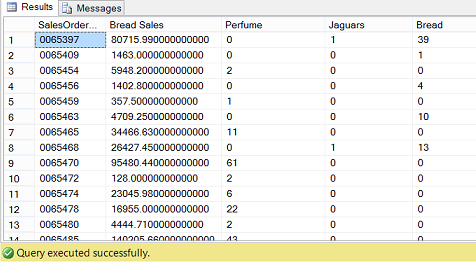

Late in October, I received an unusual request from the head of sales within one of my client sites. Sales sells three articles: bread, perfume, and Jaguar motor cars. Now the reader will note that one of these items is a staple and the other two are for those folks with considerable disposable income. Management within the firm had increased the salesmen’s bonuses for those folks that managed to sell perfume and/or Jaguars along with the standard loaves of bread. The summary report may be seen below showing the final bonus rate for each sales order booked during the month.

Read more »