We’ve looked at both the organization and development sides of managing Azure costs. One risk is an attacker who compromises an account and mis-scales resources (such as scaling up), driving up our costs. Another is an attacker who scales resources too low, which affects our clients’ ability to do their work (performance problems). This is a separate risk that may lower our costs on the cloud side but raise the cost to our reputation. A third risk is reconnaissance of our Azure use: this gives attackers information about our design and lets them later launch a wide range of attacks that appear normal to us. In this case, Azure costs may be only one of the impacts, with other impacts being just as severe.

With the cloud, we still must follow strict security principles to reduce the risk of these types of attacks. We’ll look at security principles that combine least permissions with appropriate auditing to help us prevent and detect compromises that may add to our costs.

Least Permissions

To reduce the likelihood that one account is compromised and any auditing for Azure costs is disabled, accounts with access to our Azure assets should follow the principle of least permissions. If a user only needs read access for validation purposes, we should not grant a role that could compromise our design if an attacker took over the account, for example by increasing the scale of our resources. In the below image, we see the addition of a Reader role for a group called DBDevs (database developers) on our development database. In this example, the account that makes changes would have higher permissions but would be an automated user, while the database development team would have read access for validation and would build in a local environment.

To reduce the likelihood of an attacker increasing Azure costs, we use least permissions with our database development team.
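
A minimal sketch of how the read-only assignment described above might be scripted with the AzureRm module. The helper `Get-ReaderAssignmentParams` is hypothetical, and the resource group, server name, and object ID are placeholders rather than values from a real environment. The calls that touch Azure are left commented out so the parameters can be reviewed before anything changes.

```powershell
# Hypothetical helper: builds the parameters for a read-only role assignment
# scoped to a single resource rather than the whole subscription.
function Get-ReaderAssignmentParams {
    param(
        [Parameter(Mandatory = $true)][string]$ObjectId,
        [Parameter(Mandatory = $true)][string]$ResourceGroupName,
        [Parameter(Mandatory = $true)][string]$ResourceName,
        [Parameter(Mandatory = $true)][string]$ResourceType
    )

    return @{
        ObjectId           = $ObjectId
        RoleDefinitionName = "Reader"   # built-in read-only role
        ResourceGroupName  = $ResourceGroupName
        ResourceName       = $ResourceName
        ResourceType       = $ResourceType
    }
}

# Placeholder values (assumptions for illustration only)
$assignment = Get-ReaderAssignmentParams -ObjectId "00000000-0000-0000-0000-000000000000" -ResourceGroupName "OurResourceGroup" -ResourceName "hlthserver" -ResourceType "Microsoft.Sql/servers"

# After Login-AzureRmAccount, look up the group and apply the assignment:
# $group = Get-AzureRmADGroup -SearchString "DBDevs"
# $assignment.ObjectId = $group.Id
# New-AzureRmRoleAssignment @assignment
```

Scoping the assignment to one resource, instead of the resource group or subscription, keeps the team’s read access as narrow as the validation work requires.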

This design may work for our teams, but it’s possible that we need a shared development environment in Azure, in which case we’d still need to give the team higher permissions in that shared environment. Assigning permissions depends on our needs, and there may be a use case where we want to give a team higher permissions. For these cases, we want to consider how we audit such scenarios.

Auditing Access

Least permissions and audits combine to give us a warning sign that an account, multiple accounts, or something else has been disabled or is malfunctioning. Monitoring Azure costs is one technique; we can also monitor which users have access, their access level, and whether users have been added or removed. The following scenarios show how this can play out:

- An attacker may compromise an account with significant permissions and disable all the accounts except the one being used for the attack. If one of the disabled accounts is an audit account, we may find out through missing audits.

- An attacker may compromise an account with significant permissions and create a new account for access that appears to be a normal part of our organization structure. However, we can audit account access and detect and review new accounts when they appear.

- An attacker may compromise an account with significant permissions and conduct a man-in-the-middle attack in which we receive false audit information while the attack is causing damage (i.e., our Azure costs are rising). Because the audit information misleads us, we believe that we’re fine.

- An attacker may compromise an account with significant permissions and disable all accounts except the monitoring account (or monitoring accounts). Likewise, depending on the amount of prior reconnaissance, the attacker may disable only some accounts that are used less frequently.

- Finally, an attacker may simply compromise an account with significant permissions and attack. Depending on what is wanted (such as information or causing damage through higher Azure costs), this may be more efficient even if caught quickly.
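
The second scenario above (a new account that looks legitimate) can be caught by comparing the current role assignments against a saved baseline. The following is a minimal sketch under assumptions: the function `Compare-RoleAssignmentSnapshot` is hypothetical, and the sample objects stand in for the output of `Get-AzureRmRoleAssignment`, using only the `DisplayName` and `RoleDefinitionName` properties.

```powershell
# Hypothetical helper: compares a saved baseline of role assignments with the
# current list and reports assignments that were added or removed.
function Compare-RoleAssignmentSnapshot {
    param(
        [object[]]$Baseline = @(),
        [object[]]$Current = @()
    )

    # Key each assignment by account name plus role so a role change is also reported
    $baselineKeys = @($Baseline | ForEach-Object { "$($_.DisplayName)|$($_.RoleDefinitionName)" })
    $currentKeys  = @($Current  | ForEach-Object { "$($_.DisplayName)|$($_.RoleDefinitionName)" })

    [PSCustomObject]@{
        Added   = @($currentKeys  | Where-Object { $baselineKeys -notcontains $_ })
        Removed = @($baselineKeys | Where-Object { $currentKeys  -notcontains $_ })
    }
}

# Sample data (illustrative account names, not from a real tenant)
$baseline = @(
    [PSCustomObject]@{ DisplayName = "DBDevs"; RoleDefinitionName = "Reader" },
    [PSCustomObject]@{ DisplayName = "AuditUser"; RoleDefinitionName = "Reader" }
)
$current = @(
    [PSCustomObject]@{ DisplayName = "DBDevs"; RoleDefinitionName = "Reader" },
    [PSCustomObject]@{ DisplayName = "NewOpsUser"; RoleDefinitionName = "Contributor" }
)

$diff = Compare-RoleAssignmentSnapshot -Baseline $baseline -Current $current
Write-Host ("Added: " + ($diff.Added -join ", "))
Write-Host ("Removed: " + ($diff.Removed -join ", "))
```

Any entry under Added or Removed is a prompt for review rather than proof of an attack, since legitimate changes will show up here as well.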

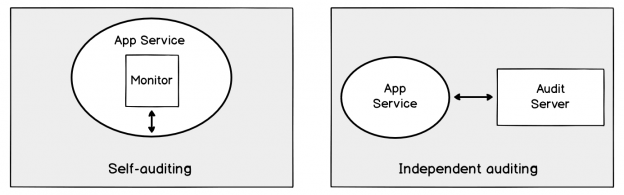

Auditing gives us information in two ways: through what we receive and through what we stop receiving (missing audits, if the attacker disables monitoring accounts). In the case of the man-in-the-middle attack where the attacker both attacks and reports false information (Stuxnet-like), we can mitigate the risk by using independent audit resources. An App Service that self-reports with a user can be compromised in this manner with enough reconnaissance, whereas with an independent server performing the check, the attacker must compromise that server as well in order to report false information (increasing the number of points the attacker has to compromise). In the below image we see the two techniques compared: on the left, a script on an App Service self-audits Azure costs; on the right, an audit server audits the App Service independently, so the attacker must now also compromise the audit server to poison our information.

Comparing an audit of Azure costs using self-auditing on the same App Service versus auditing from an independent server.

The idea behind the above design is that the increased effort of an attack may dissuade an attacker relative to the reward: two points of attack, especially when one requires poisoning data, take more effort than one. Compromising a single App Service that audits its own users to protect against attacks on Azure costs takes less effort than also compromising an independent audit of the same information.
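
Missing audits are only a useful signal if something checks for them. A minimal sketch of such a check, which an independent audit server could run, is shown here. The function `Test-AuditIsMissing`, the 60-minute default, and the timestamps are all assumptions for illustration; where the last audit time is stored depends on our own design.

```powershell
# Hypothetical helper: returns $true when the most recent audit record is older
# than the allowed gap, which we treat as a warning sign worth investigating.
function Test-AuditIsMissing {
    param(
        [Parameter(Mandatory = $true)][datetime]$LastAuditTime,
        [Parameter(Mandatory = $true)][datetime]$Now,
        [int]$MaxGapMinutes = 60
    )

    $gap = $Now - $LastAuditTime
    return ($gap.TotalMinutes -gt $MaxGapMinutes)
}

# Fixed timestamps so the example is repeatable; in practice $now would be Get-Date
$now    = [datetime]"2020-06-01T12:00:00"
$recent = Test-AuditIsMissing -LastAuditTime ([datetime]"2020-06-01T11:30:00") -Now $now
$stale  = Test-AuditIsMissing -LastAuditTime ([datetime]"2020-06-01T09:00:00") -Now $now

Write-Host ("Audit 30 minutes old is missing: " + $recent)
Write-Host ("Audit 3 hours old is missing: " + $stale)
```

Running the check from a separate server follows the design above: an attacker who silences the App Service’s own reporting would also have to compromise this server to hide the gap.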

In the below example PowerShell script, we perform an audit using the AzureRm PowerShell library by looking at our users’ names, roles, types, and ability to delegate. The script outputs the details, but for auditing purposes we may want to save these details to a file or table, or wrap the call in logic that checks whether conditions are true.

```powershell
$sub = "OurSubscriptionName"
$grp = "OurResourceGroup"
$srv = "hlthserver"
$type = "Microsoft.Sql/servers"

# Sign in and select the subscription to audit
Login-AzureRmAccount
Select-AzureRmSubscription -SubscriptionName $sub

# Get the role assignments on the server
$details = Get-AzureRmRoleAssignment -ResourceGroupName $grp -ResourceName $srv -ResourceType $type

# Output name, role, type, and delegation ability for each assignment
foreach ($detail in $details)
{
    Write-Host (
        "Name: " + $detail.DisplayName + ([Environment]::NewLine) +
        "Definition: " + $detail.RoleDefinitionName + ([Environment]::NewLine) +
        "Type: " + $detail.ObjectType + ([Environment]::NewLine) +
        "Delegate: " + $detail.CanDelegate + ([Environment]::NewLine) + ([Environment]::NewLine)
    )
}
```

Example output of an audit where we get required information to ensure no unauthorized user can affect Azure costs (details are covered in this output).

How we audit will depend on how we design access – ultimately our findings should always confirm our design of least permissions. The above example would provide us with information on the user and access level along with whether these users can delegate, and we would adjust it if we wanted to audit other detailed information. As for specific accounts being compromised, auditing for credential protection may require other forms of testing.
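
One way to confirm that findings match a least permissions design is to check each assignment against a list of approved roles. This is a minimal sketch under assumptions: the function `Get-PermissionViolation` and the approved list are hypothetical, and the sample objects mimic the `DisplayName`, `RoleDefinitionName`, and `CanDelegate` properties used in the audit script.

```powershell
# Hypothetical helper: returns assignments that break a least permissions design,
# either by holding a role outside the approved list or by being able to delegate.
function Get-PermissionViolation {
    param(
        [object[]]$Assignments = @(),
        [string[]]$AllowedRoles = @("Reader")
    )

    $Assignments | Where-Object {
        ($AllowedRoles -notcontains $_.RoleDefinitionName) -or $_.CanDelegate
    }
}

# Sample data (illustrative account names, not from a real tenant)
$sample = @(
    [PSCustomObject]@{ DisplayName = "DBDevs"; RoleDefinitionName = "Reader"; CanDelegate = $false },
    [PSCustomObject]@{ DisplayName = "UnknownUser"; RoleDefinitionName = "Owner"; CanDelegate = $false }
)

# Wrap the call in @() so the result is an array even when only one item is returned
$violations = @(Get-PermissionViolation -Assignments $sample -AllowedRoles @("Reader"))

foreach ($violation in $violations) {
    Write-Host ("Review: " + $violation.DisplayName + " has role " + $violation.RoleDefinitionName)
}
```

In a real audit, accounts that legitimately need higher permissions (such as an automated deployment user) would need their roles added to the approved list or be handled as documented exceptions.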

Security Testing for Credential Protection

In some cases, we have to acknowledge that a technical solution may not be sufficient for audits. Unfortunately, employees can have their credentials compromised through a variety of attacks, including social engineering attacks such as phishing and SIM swapping. These attacks can be the entry point through which attackers affect our Azure costs or cause other damage by using the permissions of the compromised account, which will appear to us as a legitimate user. Even if we follow strict permissions, some accounts will need more permissions, and these will always be a target for attackers.

While it may be less technical, training employees to recognize social engineering attacks is one way to prevent them. Technically skilled employees can sometimes overlook basic social engineering techniques (especially if the social engineering takes place in a physical environment). In the same manner, we may want to test employees by simulating scenarios that attackers will use, such as a security team intentionally sending phishing emails to identify who clicks through and exposes the company to risk. We should not underestimate what social engineering can cost; as we’ve seen with many recent compromises, these attacks are increasing in frequency and sophistication.

Whether the attackers want to affect our Azure costs, cause damage to our clients, or cause damage in other ways, attackers will always have an advantage over defensive systems because they only need to find one weakness – from our design to our employees. If we can identify this weakness before the attackers do, we remove an attack vector. This means we have to look at the entire range of attacks and test against the ones that may be outside development, such as social engineering.

Conclusion

The cloud offers a convenient way to develop, test ideas, and possibly create architecture that we’ll use for our business at reduced cost. We may still face Azure cost risks alongside security risks, as attackers may compromise accounts and scale resources in ways that increase our costs or affect our clients with poor performance (ultimately hurting us). We should follow the principle of least permissions by ensuring that all users have the least amount of access they need. In addition, we should monitor for users and keep track of all auditing: any missing audits may be a sign of an attack, and the faster we identify it, the faster we can resolve it.
