Having things in the cloud should make life simpler but I have experienced it’s not that straightforward. Once all access / configuration is sorted out then yes, of course! But in the meantime it can be tedious (even frustrating) and the end result is something that could have been achieved with a different method.

I think a significant chunk of what Azure offers is easier to do with the more conventional methods but that separates us from the advanced Azure features that a company would like to utilize in the shorter / longer term future. So there are reasons to spend some time / effort in getting things right up there.

In my case the task I needed to accomplish sounded simple enough:

- get a SQL Server database backup (.bak file) from Azure blob storage

- copy it to our own environment and restore it to a SQL Server instance on an Azure Windows VM

- or skip the copy step and restore straight from blob storage

Concepts

Names and concepts in Azure can be very different from on premise nomenclature so my advice is to always check the actual, up-to-date (fingers crossed) documentation that’s available on the official Microsoft websites to make sure your understanding is aligned with theirs. It’s not a question of common sense or being smart, just be aware that every nuance can make a difference!

(I will simplify things here as your true source of information should be the official documentation so I am very conveniently allowing myself to be somewhat casual ;-))

Storage account

There is (can be) a difference between a storage account and a blob storage account! Though you can hear people talking about them as actual synonyms. A little childhood-like way to remember it: every blob storage account is a storage account but not all storage account is a blob storage account.

As I think of it, the name actually shows that blob storage account is a sub-concept of storage account.

But what actually a storage account is? It is basically a general digital space that you can store (what a surprise!) your data. There are four different types of storage at the same time (i.e. the same storage account can have all or any combination of these):

- blob container – for large, unstructured data

- file shares – it is like an online external hard drive

- queues – large number of small messages in a queue

- tables – large amount of structured data in a NoSQL database

In the case of general storage account, Azure Storage Explorer shows it as External. There is a way to provision a storage account straight as blob:

I “hear” the questions being formed in your heads: what the heck could this v1 and v2 two be? Despite the similarities this is not the long-range German rocket from WW II!

As a rule of thumb use V2 if the price is inside your budget. V1 and blob are becoming a legacy feature.

Blob

“Blobs are basically files like those that you store on your computer” – says Microsoft. They say a lot more in a simple way, so head there for additional details!

So blob is not the container or the storage or something else, blob is the file itself! I heard different people having a different understanding about this but when it comes to coding you have to know how all this is structured. What a project manager can get away with during a meeting, the scripting environment won’t let you get off the hook that easily!

Structure

How to reference a blob? Since it is an online object you need a URL that points to it and a key that gives you access. That URL is put together of the following 3 parts:

- storage account

- container name

- blob (file) name

The URL always starts with https and after the name of the storage account (which is custom given by the person provisioning the service) it always has the .blob.core.windows.net suffix. Please find below an example:

Interlude #1

Let me describe my journey (isn’t this on overused word nowadays?) as I went through the steps:

Connecting to Azure storage account

It sounds simple, isn’t it? And it is … it should have been… if the storage account was originally set up correctly. In my case it didn’t happen and since a 3rd party was responsible for setting it up I was only given a URL and a key, nothing else.

I tried connecting various ways, but I couldn’t make it work. Yet it gave me a good lesson to explore these different ways:

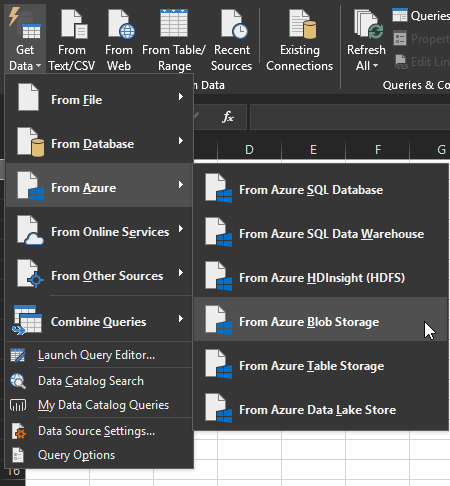

PowerBI / Excel – I put them as one option as behind the scenes it is the same

Almost the same using Power BI (I think behind the scenes it can actually be the same with a different visual theme):

Microsoft Azure Storage Explorer

This is a handy tool provided by Microsoft for free. It doesn’t do much but does that quite effectively. You can download it.

Provide the URL and the key (of course when a proper key is entered the red warning message disappears – or should disappear)

If everything is configured correctly, after clicking next you should have access to the storage:

Note the External next to the obfuscated storage account name! See details in the Concept at the beginning!

This utility is a file manager at the same time, not just a simple explorer:

AzCopy

This is a command line executable also provided by Microsoft. It needs to be installed first, the official documentation you can Bing it (if I want to be politically correct…), Google it (if I want to conform to the minority, which I don’t) or – as I prefer – duck it (using Duck Duck Go). Or just simply click this link

It is a very handy tool and nicely documented plus a lot of info is available about it online. A very simple sample (for a tongue-twister say this simple sample 3/6/x times) is like that:

AzCopy /Dest:path_for_local_file /Source:path_for_blob /SourceKey:key_provided_for_storage_account

For details about path_for_blob scroll up to just before the first interlude!

PowerShell

PowerShell is great! I think on the long run it is superior to AzCopy due its versatility as a scripting engine! Setting up all the modules and cmdlets can be a pain but once that is done and you’ve figured out how to manipulate these Azure objects in a few hours (haha), it works like a charm! Jokes aside it is very worthwhile to be familiar with PowerShell scripting when it comes to Azure!!!

So here is a little script that downloads a file (blob) from an Azure blob storage using parameters. The important line is the very last one.

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 |

#source-related parameters $SourceStorageAccount = "the url without the https:// part" $SourceStorageKey = "the key" $SourceStorageContainer = 'container name' $SourceStorageContext = New-AzureStorageContext –StorageAccountName $SourceStorageAccount -StorageAccountKey $SourceStorageKey $SourceFileName = "name of source file.ext" #destination parameters $DestinationFolder = "path to local folder" $DestinationSuffix = $DestinationSuffix = Get-Date -Format "yyyymmdd_HHMM" $DestinationFileName = $SourceFileName.Replace(".bak", "_" + $DestinationSuffix + ".bak") #list contents #Get-AzureStorageContainer -Context $SourceStorageContext -Name * | Get-AzureStorageBlob #Get-AzureStorageBlob -Container $SourceStorageContainer -Context $SourceStorageContext | Select Name #download files / objects Get-AzureStorageBlobContent -blob $SourceFileName -container $SourceStorageContainer -destination $DestinationFolder -context $SourceStorageContext |

I used RobinDotNet’s very clear and easy-to-understand (and working!!!) blog entry.

His blog has a wealth of Azure-knowledge a good starting point for PowerShell operations in the Microsoft cloud.

This other entry also helped me quite a lot

The official documentation about the different Azure PS commands can be very handy, it’s worth a bookmarking. Specifically about Get-AzureStorageBlobContent

Interlude #2

So we have figured out how to get access to the blob (the file!) and download it to a local folder. The next step is restoring the .bak database backup. If to be done from that local file, my post would end here. But there is a way to restore directly from inside the storage account blob container without downloading the file first.

Is that a straightforward and easy-to-use option? In theory: yes. In practice? Read on!

Restore database directly from blob

First I thought there is a way SSMS can be used for that but I couldn’t make it work. It required quite a complicated setup … and my experiment ended with error messages. So instead let’s follow the T-SQL way!

Page Blob (no blocks please, this is not LEGO)

First of all there are three different types of blobs: block blobs, page blobs, append blobs. SQL Server only supports restore from URL if the blob’s type is page blob not block. Here’s the snippet from the official documentation:

If you choose to copy and upload a backup file to the Windows Azure Blob storage service, use page blob as your storage option. Restores from Block Blobs are not supported. RESTORE from a block blob type fails with an error.

Of course the blob I was granted access to was in Block Blob format… luckily there is an easy fix for that: AzCopy has a switch that is nice and easy for this very case

|

1 |

/BlobType:page |

So once your blob has the correct type (it doesn’t affect the actual content of the file only how it is stored) you can go on with the actual restore!

Restore from URL

Steps to follow

- create credential that uses the Shared Access Signature

- another credential to authenticate to the blob storage container

- restore the database

Credentials

Two of these are needed, one uses the storage account URL and the key that we used to connect to the blob container, the other one is to utilise the Shared Access Signature.

Julie Koesmarno wrote a nice post about this 5 years ago but her solution still works! So don’t hesitate to check out that entry of hers:

The T-SQL commands to create them:

|

1 2 3 4 5 6 7 8 9 |

--using the url and the key CREATE CREDENTIAL [Credential_BLOB] WITH IDENTITY= 'storage account url', SECRET = 'storage account key'; --using the Shared Access Signature (SAS) CREATE CREDENTIAL [Credential_Name_SAS] WITH IDENTITY = 'SHARED ACCESS SIGNATURE', SECRET = 'st=a very looooong key'; |

To find out the Shared Access Signature, the easiest way is to use Azure Storage Explorer:

The pop up window allows you to configure the permissions you can assign to whatever uses this new signature you are about to create (Read / Write / Delete / List) and you can specify if it is blob or container level (to create a container level SAS, right-click on the actual container will also provide the option of selecting the Get Shared Access Signature… option.

When it’s created you’ll receive the URL (which you know anyway) and the Query string that is used as the secret for the SHARED ACCESS SIGNATURE identity in the second SQL query above.

The ? is not needed at the beginning, just start from the st=

RESTORE

Then finally we have arrived to the last step: the actual restore! This is again done using T-SQL instead of a GUI-based approach as many things related to Azure don’t have a proper GUI yet.

|

1 2 3 4 |

RESTORE DATABASE Database_Name FROM URL = 'https://StorageAccount.blob.core.windows.net/Container/SampleDatabase.bak' WITH CREDENTIAL = 'Credential_BLOB', MOVE 'Database' to 'D:\DATABASES\SampleDatabase.mdf', MOVE 'Database_log' to 'M:\LOGS\SampleDatabase.ldf' |

And just when I thought I had it right… an error message popped up:

Msg 3268, Level 16, State 1, Line 17

Cannot use the backup file

‘https://StorageAccount.blob.core.windows.net/Container/SampleDatabase.bak’ because it was originally formatted with sector size 512 and is now on a device with sector size 65536.

Msg 3013, Level 16, State 1, Line 17

RESTORE DATABASE is terminating abnormally.

The key here is it was originally formatted with sector size 512 and is now on a device with sector size 65536.

There are two ways to solve it:

- on the source server back up the database again with the sector size of the target device (make it 65 536)

-

or in the restore command specify the BLOCKSIZE of the source environment (make it 512)

12345RESTORE DATABASE Database_Name FROM URL = 'https://StorageAccount.blob.core.windows.net/Container/SampleDatabase.bak'WITH CREDENTIAL = 'Credential_BLOB',MOVE 'Database' to 'D:\DATABASES\SampleDatabase.mdf',MOVE 'Database_log' to 'M:\LOGS\SampleDatabase.ldf',BLOCKSIZE = 512

That command now properly restores the database. Drumroll and fanfare! I can now see and query the database in SSMS!

References

- Azure Storage

- Azure Storage account options

- Azure Storage Cmdlets

- Get-AzureStorageBlobContent

- SQL Server Backup to URL

- CDS for apps in the [POWER WORLD] - November 19, 2018

- Dynamic Partitioning in Azure Analysis Services (tabular) - August 6, 2018

- Connecting to Azure SQL Database - May 23, 2018