In the first part of reviewing the basics of bulk insert, we looked at importing entire files, specifying delimiters for rows and columns, and bypassing error messages. Sometimes we’ll want to skip the first or ending lines of a file, log errors and bad records for review after inserting data, and load values directly into their data types rather than importing everything as varchar and converting later. In this part, we look at these techniques using T-SQL’s native bulk insert.

Skipping Lines and Working With Data Types

With many imports, the first row of the file names the file’s columns. We don’t have to keep the column names when we use bulk insert, but if we insert into typed columns, we need to be careful about a first row that doesn’t match those types. In the code below that creates the table, and in the image of the file below it, we see that the first line of the file has the values SpefzA, SpefzB, SpefzC, SpefzD, which don’t match the table’s data types (except in 2 cases). We also see that the values beneath the header are not the same type as the header text: for instance, the 0 or 1 under SpefzC looks like a bit and not a varchar. The file’s first line in this case describes the data in the 2nd row through the end of the file, so when we insert the data from the file, we’ll want to skip the first row.

```sql
CREATE TABLE etlImport5(
    VarcharSpefzTen VARCHAR(10),
    IntSpefz INT,
    BitSpefz BIT,
    VarcharSpefzMax VARCHAR(MAX)
)
```

The first line in our file we want to exclude as it won’t match the above format of our table.

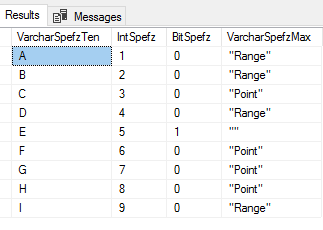

In the below bulk insert, we specify the file, a comma as the column terminator (called FIELDTERMINATOR), and a newline character as the row terminator (ROWTERMINATOR). We also add another option, FIRSTROW, which we set to 2 (the default is the start of the file, row 1). This skips the first line that we see in the above image and starts at the A,1,0,"Range" line. After our insert, we check our table with a select and see the data without the first row from the file.

```sql
BULK INSERT etlImport5
FROM 'C:\ETL\Files\Read\Bulk\daily_20180101.txt'
WITH (
    FIELDTERMINATOR = ',',
    ROWTERMINATOR = '\n',
    FIRSTROW = 2
)

SELECT * FROM etlImport5
```

Our bulk insert skipped the first line, which was only specifying the columns in the file.

In some cases, the first row of a file simply describes what we’ll find in the file, and we can skip it by starting on row 2 (or, if there are several rows to skip, on row 3, 4, etc). In rarer cases, we may come across files that have an ending line that doesn’t match the format at all. Sometimes this is a sentence stating that the file has ended, or a line that says something to the effect of “File created at 2018-01-01 at 12 AM.” Since these lines don’t match the file format at all, they would normally throw an error, but just as we can specify the first row of a file, we can specify the last row of a file.

We add two lines – one that matches the format and the last line that is a sentence.

In our file, we’ll add two lines. One of the lines matches our current format, with three commas separating values whose data types match our created table. The last line is a sentence with no delimiters that doesn’t match our format. In the below code, we’ll empty our table by truncating it and try to bulk insert our updated file without specifying an ending row, so that we see the error. After that, we’ll bulk insert the file again, this time specifying that row 7 is the last row of data we want to insert (the bad row is on row 8).

```sql
TRUNCATE TABLE etlImport5

--- Fails on last line
BULK INSERT etlImport5
FROM 'C:\ETL\Files\Read\Bulk\daily_20180101.txt'
WITH (
    FIELDTERMINATOR = ',',
    ROWTERMINATOR = '\n',
    FIRSTROW = 2
)

--- Passes
BULK INSERT etlImport5
FROM 'C:\ETL\Files\Read\Bulk\daily_20180101.txt'
WITH (
    FIELDTERMINATOR = ',',
    ROWTERMINATOR = '\n',
    FIRSTROW = 2,
    LASTROW = 7
)

SELECT * FROM etlImport5
```

Our first bulk insert fails because the ending line doesn’t match our format.

When we specify the last line of 7, which is the last line that matches our file’s format, the bulk insert passes.

While we won’t often see ending lines like the one above, it’s useful to know that bulk insert can insert a range of rows from a file, which makes leaving out an ending line easy.
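As a minimal sketch of inserting a range, assuming the same file and table as above, we can combine FIRSTROW and LASTROW to load only a slice from the middle of the file. The row numbers 3 and 5 are illustrative values and not part of the original example.

```sql
-- Sketch only: load just rows 3 through 5 of the file (illustrative row numbers).
TRUNCATE TABLE etlImport5;

BULK INSERT etlImport5
FROM 'C:\ETL\Files\Read\Bulk\daily_20180101.txt'
WITH (
    FIELDTERMINATOR = ',',
    ROWTERMINATOR = '\n',
    FIRSTROW = 3,  -- skip the header (row 1) and the first data row (row 2)
    LASTROW = 5    -- stop before the remaining rows, including the ending sentence
);

SELECT * FROM etlImport5;
```

Both options count rows by the row terminator, so a file with a different line ending needs a matching ROWTERMINATOR for these numbers to point at the rows we expect.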

Logging Errors With Bulk Insert

As we saw in the first part of this series, sometimes we will have data that don’t match our table’s format even when the correct number of delimiters is present. We can specify a maximum number of errors so that the insert continues even if some lines don’t get added to our table. Reviewing the failures might be easy when it’s one or two lines, but what if we have a large file and want to see a log of all the errors? In our example file, we’ll add some bad data along with good data and test logging these erroneous rows.

We add three lines of bad data that we’ll be logging to review.

```sql
TRUNCATE TABLE etlImport5

BULK INSERT etlImport5
FROM 'C:\ETL\Files\Read\Bulk\daily_20180101.txt'
WITH (
    FIELDTERMINATOR = ',',
    ROWTERMINATOR = '\n',
    FIRSTROW = 2,
    MAXERRORS = 100,
    ERRORFILE = 'C:\ETL\Files\Read\Bulk\daily_20180101_log'
)

SELECT * FROM etlImport5
```

We see the three error messages from the three bad rows of data.

We still have the correct data inserted even with the errors.

Because we’ve allowed up to 100 errors and we only experienced 3 errors, bulk insert continued to insert data and we see the 9 rows of good data, even with the error message about the 3 bad records. We also specified an error file and when we look at our file location, we see two additionally created files – daily_20180101_log and daily_20180101_log.Error. The below images show these files in the directory and their contents (I opened these files in Notepad).

Two error files appear after bulk insert passes with three errors.

We see the error records with the latter one appearing due to end of file reasons.

We see the three error row numbers that were experienced during the bulk insert with details.

The general log file shows us the erroneous rows, with the last erroneous row appearing due to end of file reasons (if you add a row with the data J,10,0,"Range" and retest, you’ll only get three error rows because the last row of data is legitimate).

The .Error file specifies the erroneous rows of data, in this example rows 8, 11 and 13, and gives us the type of problem with these rows as they relate to the table: HRESULT 0x80020005, which indicates a type mismatch. When we review the file and table, we see that the type expected was a bit, but these values are a 2, and a bit can only be a 1 or 0. From a debugging perspective, this can save us a lot of time, as our data context may allow us to bypass some erroneous rows, and we can quickly review them along with where they occurred in the file. It is worth noting that in order to use the error file, the user executing the bulk insert needs write access to the file location; otherwise, we need to specify another location where writing is allowed.
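If we would rather review the rejected rows with a query than in a text editor, one option is to load the general log file into a staging table where every column is a varchar. The sketch below assumes the log file holds the rejected rows with the same comma and newline delimiters as the source file. The table etlImport5Rejects and its column names are hypothetical and not part of the original example.

```sql
-- Hypothetical staging table: every column is VARCHAR, so a value like 2 in the
-- bit position loads as text instead of failing conversion.
CREATE TABLE etlImport5Rejects(
    VarcharSpefzTenRaw VARCHAR(MAX),
    IntSpefzRaw VARCHAR(MAX),
    BitSpefzRaw VARCHAR(MAX),
    VarcharSpefzMaxRaw VARCHAR(MAX)
);

-- Assumption: the log file keeps the source file's delimiters.
BULK INSERT etlImport5Rejects
FROM 'C:\ETL\Files\Read\Bulk\daily_20180101_log'
WITH (
    FIELDTERMINATOR = ',',
    ROWTERMINATOR = '\n'
);

-- Review the rejected values as plain text.
SELECT * FROM etlImport5Rejects;
```

A row that was logged for end of file reasons may be incomplete and could fail this load too, so this is a starting point for review and not a guaranteed round trip. Also note that bulk insert raises an error if the file named in ERRORFILE already exists, so rerunning the earlier insert requires removing the old log files or choosing a new file name.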

More important than understanding how to allow and log errors is recognizing when these are appropriate for our data context. As an example, if we’re testing a data set for validity, use or other purposes, we want the data even if it has some errors, as we’re in the process of testing it. This is also true for other business use-cases where we may accept some bad data if most of the data can be validated (for instance, manual data entry is error-prone, so with data like this, we will expect a percent of it to be erroneous). On the other hand, there may be contexts where one or two erroneous rows will invalidate a data set. In these situations, we can set the maximum errors to zero (MAXERRORS = 0) so that any bad row cancels the insert, and we can skip logging. Note that SQL Server’s documentation lists the default for MAXERRORS as 10 when the parameter is omitted, so we should set zero explicitly and not rely on the default.
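For the strict case, a minimal sketch of an all-or-nothing load, assuming the same file and table as above, is to set MAXERRORS to 0 and wrap the truncate and insert in a transaction. The TRY...CATCH wrapper and the transaction are additions for illustration and were not part of the earlier examples; THROW requires SQL Server 2012 or later.

```sql
-- Sketch only: any bad row cancels the load and the table keeps its prior contents.
BEGIN TRY
    BEGIN TRANSACTION;

    TRUNCATE TABLE etlImport5;

    BULK INSERT etlImport5
    FROM 'C:\ETL\Files\Read\Bulk\daily_20180101.txt'
    WITH (
        FIELDTERMINATOR = ',',
        ROWTERMINATOR = '\n',
        FIRSTROW = 2,
        MAXERRORS = 0  -- explicit zero: do not tolerate any bad rows
    );

    COMMIT TRANSACTION;
END TRY
BEGIN CATCH
    -- Undo the truncate and any partial insert, then surface the original error.
    IF @@TRANCOUNT > 0
        ROLLBACK TRANSACTION;
    THROW;
END CATCH;
```

The design choice here is that a failed file leaves the previously loaded data in place, which suits contexts where a partially loaded data set is worse than a stale one.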

Summary

We’ve reviewed using bulk insert’s first and last row options, which can be helpful when we have delimited files with headers that describe the data but that we don’t want to import because they are incompatible with our data structure. We’ve also seen how we can use the error file parameter along with maximum errors to quickly review what failed with the bulk insert as well as where these failures exist in our file. Finally, we reviewed some thoughts about which data contexts suit allowing and logging errors.

Table of contents

- T-SQL’s Native Bulk Insert Basics
- Working With Line Numbers and Errors Using Bulk Insert
- Considering Security with SQL Bulk Insert
- SQL Bulk Insert Concurrency and Performance Considerations
- Using SQL Bulk Insert With Strict Business Rules
