This article talks about basic concepts of SQL unit testing from a Data Warehouse point of view using tSQLt, a highly productive and acclaimed SQL unit testing framework.

This article is both for beginners to learn the basics and experienced Data Warehouse professionals to review their Data Warehouse unit testing approach in favor of the tSQLt framework.

Additionally, the readers of this article are going to learn the basic tips of applying tSQLt in the SQL unit testing of Data Warehouse Extracts alongside a quick overview of Data Warehouse concepts.

About Data Warehouse

It is very important to first get familiar with Data Warehouse concepts if you are not already familiar.

Bear in mind that Data Warehouse is a massive subject that cannot be covered in one or two articles. However, it is not difficult to get an overview of Data Warehouse keeping in mind the scope of this article.

What is Data Warehouse?

Data Warehouse is a centralized repository or storage of data that is highly optimized for reporting and analysis purposes.

What does Data Warehouse do?

Data Warehouse helps businesses to understand their weaknesses and strengths by providing deep insights into their data by using special storage, architecture and processes.

What else Data Warehouse does?

Data Warehouse also helps in making quick decisions based on the facts provided by it, thereby making business more productive and less susceptible.

Types of Data Warehouse

A traditional Data Warehouse can be built in the form of cubes or data models that can be accessed for reporting and real-time analysis by the business users.

Dimension and facts

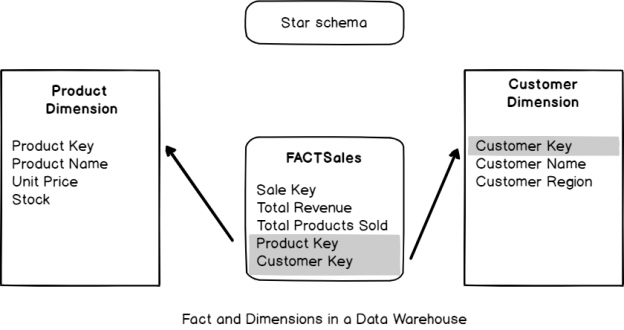

A dimension is anything that is of interest to the business such as Product, Customer or Supplier, whereas FACT, as the name indicates, contains facts and figures alongside keys to link with dimensions.

The architecture of Data warehouse

A typical Data Warehouse either follows a star-like schema where a FACT is surrounded by dimensions (like a star) or a snowflake-like schema where a dimension can be directly linked with Fact or can also be linked with another dimension. However, their further details are beyond the scope of this article:

Data Warehouse Staging Extracts

Let us now talk about Data Warehouse Extracts, particularly staging extracts.

What are Data Warehouse Staging Extracts?

Data Warehouse staging extract is basically a process that copies data from source to Data Warehouse Staging Area where it becomes available for further processing into dimensions and fact(s).

What is Staging Area?

Staging from a Data Warehouse perspective is a one-to-one mapping of data from source to destination where data from multiple sources is stored to be processed further.

Example of Staging

For example, if you have a table named Customer, which consists of only two columns CustomerId and Name, then this table must also be present in staging with the same columns to be populated from the source or multiple sources.

Benefits of Staging Area

The main benefit of the Staging Area is the independence from the source once the data is extracted, which means the Data Warehouse further processing does not need to refer to the original source as long as its staging extract has been captured.

Importance of Staging Extract

The most important part of the initial phase of Data Warehouse processing is the staging extract because this is the entrance to the Data Warehouse database, which then serves the Data Warehouse business intelligence needs.

SQL Unit Testing Staging Extract

As mentioned earlier, staging extract(s) is the most important starting point as far as Data Warehouse workflows are concerned, so we should be then somehow SQL unit testing these extracts.

However, it is also crucial to identify what needs to be unit tested and what can be excluded from SQL Unit Testing.

We are first going to clarify the following two things in the context of SQL Unit Testing Data Warehouse Extracts:

- What is that we are going to write our SQL Unit Tests against?

- What SQL Unit Testing tool or framework is going to be used?

Staging Extract and Source Script

The process of running staging extract (copying data from source to destination) typically depends on the source script, which runs against the source database to extract data, which is then copied over to the staging area.

A traditional source script selects some or all the columns of a table from source (database).

So, the source script is actually the potential object against which we are going to write and run our SQL Unit Tests.

TSQLt – SQL unit testing framework

The most suitable testing framework for SQL Unit Testing Data Warehouse Staging Extract is tSQLt, which is, by default, purpose-built and feature-rich.

Please read the following articles to get started with tSQLt if you are not already familiar with it:

- tSQLt – A Forgotten Treasure in Database Unit Testing

- Fundamentals of Test-Driven Database Development (TDDD) with tSQLt unit testing

- Three Standard SQL Unit Tests you can write against any Stored Procedure

Replicating SQL Unit Testing scenario

As already mentioned in this article that a Data Warehouse extract runs between source and Data Warehouse database, then it is easy to guess that at least two sample databases are required to implement the walkthrough to get a better understanding.

Setup source sample database

Let us first setup source sample database called SQLDevArticlesV4 by running the following T-SQL script against the master database:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 |

-- 1 Create SQLDevArticlesV4 source database CREATE DATABASE SQLDevArticlesV4; GO USE SQLDevArticlesV4; GO -- 2 Create author table CREATE TABLE [dbo].[Author] ( [AuthorId] INT IDENTITY (1, 1) NOT NULL, [Name] VARCHAR (40) NOT NULL, [RegistrationDate] DATETIME2 (7) NULL ); -- 3 Create article tables CREATE TABLE [dbo].[Article] ( [ArticleId] INT IDENTITY (1, 1) NOT NULL, [Title] VARCHAR (300) NOT NULL, [Published_Date] DATETIME2 (7) NOT NULL ); -- 4 Populate author table SET IDENTITY_INSERT [dbo].[Author] ON INSERT INTO [dbo].[Author] ([AuthorId], [Name], [RegistrationDate]) VALUES (1, N'Asif', N'2018-01-01 00:00:00') INSERT INTO [dbo].[Author] ([AuthorId], [Name], [RegistrationDate]) VALUES (2, N'Peter', N'2018-02-01 00:00:00') INSERT INTO [dbo].[Author] ([AuthorId], [Name], [RegistrationDate]) VALUES (3, N'Sarah', N'2018-03-02 00:00:00') INSERT INTO [dbo].[Author] ([AuthorId], [Name], [RegistrationDate]) VALUES (4, N'Adil', N'2018-04-02 00:00:00') INSERT INTO [dbo].[Author] ([AuthorId], [Name], [RegistrationDate]) VALUES (5, N'Sam', N'2019-01-01 00:00:00') SET IDENTITY_INSERT [dbo].[Author] OFF -- 5 Populate article table SET IDENTITY_INSERT [dbo].[Article] ON INSERT INTO [dbo].[Article] ([ArticleId], [Title], [Published_Date]) VALUES (1, N'Fundamentals of Database Programming', N'2018-01-02 00:00:00') INSERT INTO [dbo].[Article] ([ArticleId], [Title], [Published_Date]) VALUES (2, N'Advanced Database Programming', N'2018-01-03 00:00:00') INSERT INTO [dbo].[Article] ([ArticleId], [Title], [Published_Date]) VALUES (3, N'Understanding SQL Stored Procedures ', N'2018-02-02 00:00:00') INSERT INTO [dbo].[Article] ([ArticleId], [Title], [Published_Date]) VALUES (4, N'Database Design Concepts', N'2018-03-02 00:00:00') INSERT INTO [dbo].[Article] ([ArticleId], [Title], [Published_Date]) VALUES (5, N'Power BI Desktop Fundamentals', N'2019-01-02 00:00:00') SET IDENTITY_INSERT [dbo].[Article] OFF; GO |

Setup Data Warehouse sample database

Once the source database sample is set up the next step is to create the Data Warehouse database SQLDevArticlesDW sample with staging area (schema) and tables similar to the ones in source by running the following T-SQL script:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 |

-- 1 Create SQLDevArticlesDW database CREATE DATABASE SQLDevArticlesDW; GO USE SQLDevArticlesDW; GO -- 2 Create Staging schema CREATE SCHEMA Staging AUTHORIZATION dbo; GO -- 2 Create staging author table CREATE TABLE [Staging].[Author] ( [AuthorId] INT IDENTITY (1, 1) NOT NULL, [Name] VARCHAR (40) NOT NULL, [RegistrationDate] DATETIME2 (7) NULL ); -- 3 Create article tables CREATE TABLE [Staging].[Article] ( [ArticleId] INT IDENTITY (1, 1) NOT NULL, [Title] VARCHAR (300) NOT NULL, [Published_Date] DATETIME2 (7) NOT NULL ); |

Quick check

Have a quick look at both source and Data Warehouse sample (database) that you have just created:

What is next

We have both samples ready to be used, so could you please guess what next step is?

Well, the answer is to think about the potential object, which helps us to get the source data to be copied over to Data Warehouse.

Those who are already familiar with Data Warehouse practices are well aware that we actually need here Integration Services Packages also called SSIS Packages to perform this Data Warehouse act, but we are more focused to author (build) an object which sources the required data which can then be initiated or activated via SSIS Packages and for which SQL Unit Testing can be applied.

Analyze source script

Analyze the source script for author extract, which is simply selecting all the columns from author tables from the source database as follows:

|

1 2 3 4 |

USE SQLDevArticlesV4 --Analyse auhtor extract source script SELECT a.[AuthorId],a.[Name],a.[RegistrationDate] FROM dbo.Author a |

The output is as follows:

Source script mapping options

Please remember you can map this simple source script to any one of the following objects in the database:

- SQL view

- Stored procedure

Creating GetAuthorExtract object from source script

We are going to create a stored procedure called GetAuthorExtract (database) in the source database as follows:

|

1 2 3 4 5 6 7 8 |

USE SQLDevArticlesV4; GO --Create GetAuthorExtract procedure CREATE PROCEDURE GetAuthorExtract AS SELECT a.[AuthorId],a.[Name],a.[RegistrationDate] FROM dbo.Author a RETURN 0 |

Test run the procedure

Go for a quick test run of the stored procedure as follows:

|

1 2 3 4 5 6 |

USE [SQLDevArticlesV4] GO -- Run author staging extract stored procedure EXEC [dbo].[GetAuthorExtract] GO |

The results should match the output shown below:

So now, you are ready to write a tSQLt Unit Test against this procedure because this is actually the object of interest that takes data from the source and loads it into the destination and we would like to make sure that it must pass SQL unit test to prove it serves the purpose.

Setup tSQLt framework

Run tSQLt setup (by running tSQLt.class.sql script you get when you download it) to install the framework in the same source database (SQLDevArticlesV4) where GetAuthorExtract stored procedure was created.

Please read the article Conventional SQL Unit Testing with tSQLt in Simple Words to install tSQLt if you are not familiar with tSQLt installation.

However, I strongly recommend to please use a test-driven database development approach in your day to day professional SQL unit testing work.

Quick check

In Object Explorer, navigate to SQLDevArticlesV4 | Tables node to view the tSQLt installed objects:

Create unit test class

You have to create a new class (schema) for SQL unit testing with tSQLt as follows:

|

1 2 3 4 5 6 7 8 |

USE SQLDevArticlesV4; GO -- Creating unit test class ArticleTests CREATE SCHEMA [AuthorTests] Authorization dbo GO EXECUTE sp_addextendedproperty @name='tSQLt.TestClass',@value=1,@level0type='SCHEMA',@level0name='AuthorTests' |

Create a unit test to check GetAuthorExtract works

In order to make sure that the procedure which extracts data from source works, we have to create a SQL Unit Test, which helps us to determine the reliability of the procedure prior to running it to copy the data from source to Data Warehouse.

Create a SQL Unit Test to check GetAuthorExtract object as follows:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 |

USE SQLDevArticlesV4 GO --Create unit test to check GetAuthorExtract works ALTER PROCEDURE [AuthorTests].[test to check GetAuthorExtract pulls all data] AS -- Assemble EXEC tSQLt.FakeTable @TableName='dbo.Author',@Identity=0 -- Fake Author table Create TABLE [AuthorTests].[Expected] -- Create expected table ( [AuthorId] INT NOT NULL, [Name] VARCHAR (40) NOT NULL, [RegistrationDate] DATETIME2 (7) NULL ) INSERT INTO AuthorTests.Expected -- Insert data into exepcted table (AuthorId,Name,RegistrationDate) VALUES (1,'Asim','01 Jul 2019'), (2,'Ali','01 Nov 2019'), (3,'Mike','02 Dec 2019') SELECT * INTO AuthorTests.Actual FROM dbo.Author -- Create an actual table from the Author table (no data) INSERT INTO dbo.Author -- Insert data into Author table (AuthorId,Name,RegistrationDate) VALUES (1,'Asim','01 Jul 2019'), (2,'Ali','01 Nov 2019'), (3,'Mike','02 Dec 2019') -- Act INSERT INTO AuthorTests.Actual -- Insert data into an actual table by running the stored procedure (AuthorId,Name,RegistrationDate) EXEC dbo.GetAuthorExtract -- Assert (compare expected table with actual table results) EXEC tSQLt.AssertEqualsTable @Expected='AuthorTests.Expected',@Actual='AuthorTests.Actual' |

Run SQL Unit Test to check GetAuthorExtract

Finally, run the SQL unit test by the following command:

|

1 2 3 4 5 |

USE SQLDevArticlesV4 GO -- Run all unit tests related to AuthorTests test class EXEC tsqlt.RunTestClass "AuthorTests" |

If you have followed all the steps of the walkthrough then we are expecting this SQL Unit Test to pass as shown below:

Congratulations! You have just learned how to write and run SQL Unit Test against Data Warehouse staging extract and this is also applicable to any other similar scenario where data needs to be extracted from source to destination.

Table of contents

- How to create and query the Python PostgreSQL database - August 15, 2024

- SQL Machine Learning in simple words - May 15, 2023

- MySQL Cluster in simple words - February 23, 2023