This article shows how to develop U-SQL jobs locally so that, once they are ready, they can be deployed to the Azure Data Lake Analytics service in the Azure cloud.

Introduction

In the previous article, Developing U-SQL jobs on Azure Data Lake Analytics, we learned to develop an Azure Data Lake Analytics job that reads data from files stored in a Data Lake Storage account, processes it, and writes the output to a file. We also learned how to optimize the performance of the job. Now that we understand the basic concepts of working with these jobs, suppose we are considering this service for a project in which multiple developers build these jobs on their local workstations. In that case, we need to equip the development team with the tools they need to develop these jobs. Developers can also write jobs in the web console, but that is often not the most efficient approach, because the web console lacks many of the features that locally installed IDEs offer for large-scale code development.

Setting up sample data in Azure Data Lake Storage Account

While developing locally, one may need test data both in the cloud and on the local machine. We will explore both options. In this section, let’s look at how to set up sample data that can be used with U-SQL jobs.

Navigate to the dashboard page of the Azure Data Lake Analytics account. On the menu bar, you will find an option named Sample Scripts, as shown below.

Click the Sample Scripts menu item, and a screen appears as shown below. There are two options: one installs the sample data, and the other installs the U-SQL advanced analytics extensions, which allow us to use languages such as R and Python.

Click the sample data warning icon to start copying the sample data to the Data Lake Storage account. Once the copy is done, a confirmation message appears as shown below. This completes the setup of sample data on the Data Lake Storage account.

Setting up a local development environment

Visual Studio Data Tools provides the development environment as well as the project templates for developing U-SQL jobs and other Azure Data Lake Analytics projects. It is assumed that you have Visual Studio installed on your local machine. If not, consider installing at least the Community edition of Visual Studio, which is available free of charge for development purposes. Once Visual Studio is installed, we can choose which components to install. Open the component configuration page, where you will see the different components that you can optionally install on your local machine.

Select the Data storage and processing toolset as shown below. If you check the details on the right-hand side, you will find that this stack contains the Azure Data Lake and Stream Analytics Tools, the set of tools and extensions we need for developing Azure Data Lake Analytics projects.

Once it is installed, you should see the Data Lake menu under the File menu as shown below. The Data Lake menu has several options, but the main item, which brings together all the features, is Data Lake Analytics Explorer.

Open the Data Lake Analytics Explorer menu item. It asks for login credentials; sign in with the Azure account under which you created the Data Lake Analytics account. Once you are connected, you should see the sample data as well as the other files we created in the previous articles, as shown below.

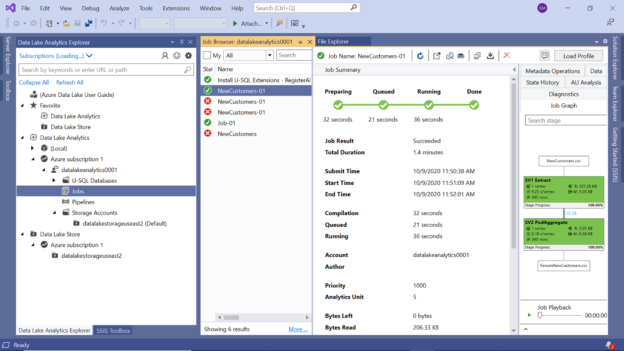

Click the Jobs item in the left pane, and the right pane shows the job execution history. Click any job to open the same set of tabs that we explored in the previous part of this series, such as Diagnostics, AU Analysis, and Job Graph, as shown below.

Suppose different team members are working on different jobs, some of which are scheduled for execution and some already queued. Because this IDE is connected live to the Azure Data Lake Analytics account, you can also see any U-SQL jobs that are currently running, as shown below.

Developing and executing U-SQL Jobs locally

Suppose we are tasked with creating a new project containing many U-SQL jobs that must be developed and tested locally and then, once they work as expected, deployed to the Azure Data Lake Analytics account.

As a first step, we need to create a new Azure Data Lake Analytics project and add U-SQL jobs to it. Since we already know how to work with jobs, the focus here is on creating and executing a new project. Open the File menu, click New Project, and type U-SQL as shown below. You can create several types of U-SQL projects, but the one of interest here is the U-SQL sample application, shown below. This project includes a few ready-to-use scripts as well as test data. Provide an appropriate name and create the project.

Once the project is created, you will see its home page as shown below. The U-SQL script files are listed in the Solution Explorer pane. The documentation on the home page explains how to set up the test data that the scripts use; follow the instructions in Step 1 to configure it.

Open the first U-SQL script file, shown below. It is a very simple script: it reads the test data file from the directory you configured earlier and writes the same data to an output file. Note that the source file is in TSV (tab-separated values) format, while the output file is in CSV (comma-separated values) format.
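To make that TSV-to-CSV step concrete outside Visual Studio, here is a minimal Python sketch, not part of the U-SQL sample project, that performs the same kind of conversion on an in-memory string. The function name `tsv_to_csv` is hypothetical. The sketch deliberately ignores U-SQL specifics such as the typed schema the script declares and the options of the CSV outputter, so its output should not be expected to match the job's output byte for byte.

```python
import csv
import io


def tsv_to_csv(tsv_text: str) -> str:
    """Hypothetical helper: re-emit tab-separated rows as comma-separated rows.

    It mirrors only the shape of the sample script (read TSV, write CSV);
    it does not apply U-SQL column types or outputter options.
    """
    # QUOTE_NONE: treat any double quotes in the TSV as ordinary characters.
    reader = csv.reader(io.StringIO(tsv_text), delimiter="\t", quoting=csv.QUOTE_NONE)
    out = io.StringIO()
    # The default QUOTE_MINIMAL quotes only fields that contain commas, quotes, or newlines.
    writer = csv.writer(out, lineterminator="\n")
    writer.writerows(reader)
    return out.getvalue()


if __name__ == "__main__":
    sample = "1\ten-us\tcats, dogs\n2\ten-gb\tweather\n"
    # Prints two CSV lines; the field containing a comma is wrapped in quotes.
    print(tsv_to_csv(sample), end="")
```

Quoting only when needed keeps a field such as `cats, dogs` intact as one CSV value instead of splitting it into two columns.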

Start the job by clicking the Start button on the toolbar. Once execution starts, you should see the compile view of the job: the job is compiled first and executed afterwards. You can also see the job graph generated from the U-SQL script. It does not show any statistics yet because this is the compile-time view.

Once compilation completes and execution starts, a pop-up window like the one below may appear, showing the execution statistics of the job. After the job completes, you will also see colors in the job graph.

Once the job executes successfully, the output file is generated. Suppose we want to verify the source and destination files. Navigate to the source file in the test data directory you set up earlier. The data in the source TSV file looks as shown below.

The output CSV file that the job generated looks as shown below.
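This visual comparison of the source and output files can also be scripted. The Python sketch below, which is not part of the sample project, parses the TSV source and the CSV output and reports the two row counts and the indexes of rows whose values differ. The function names `compare_rows` and `compare_files` are hypothetical. Because U-SQL may re-format typed values when writing output (date and time columns, for example), treat a mismatch as a prompt to inspect those rows rather than as proof that the job is wrong.

```python
import csv
from pathlib import Path
from typing import Iterable


def compare_rows(tsv_lines: Iterable[str], csv_lines: Iterable[str]) -> tuple[int, int, list[int]]:
    """Compare TSV source rows with CSV output rows.

    Returns (source row count, output row count, indexes of rows that differ).
    Only rows present in both inputs are compared value by value.
    """
    source = list(csv.reader(tsv_lines, delimiter="\t", quoting=csv.QUOTE_NONE))
    # The default CSV dialect strips any quotes the outputter added around values.
    output = list(csv.reader(csv_lines))
    mismatches = [i for i, (s, o) in enumerate(zip(source, output)) if s != o]
    return len(source), len(output), mismatches


def compare_files(tsv_path: Path, csv_path: Path) -> tuple[int, int, list[int]]:
    # newline="" lets the csv module handle line endings itself.
    with tsv_path.open(newline="", encoding="utf-8") as src, \
         csv_path.open(newline="", encoding="utf-8") as out:
        return compare_rows(src, out)
```

For example, `compare_files(Path("input.tsv"), Path("output.csv"))`, where both paths are placeholders for the source file in your test data directory and the file the job produced, returns equal counts and an empty list when every row matches.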

In this way, developers can use Visual Studio to develop U-SQL jobs for Azure Data Lake Analytics locally, without incurring the cost of cloud execution during the development phase, when the same job is often run many times to test its logic.

Conclusion

In this article, we started by setting up sample data on the Azure Data Lake Storage account. Then we installed Visual Studio with the Azure Data Lake Analytics related extensions. We connected Data Lake Analytics Explorer to our Azure account and explored its features for monitoring and operating U-SQL jobs from Visual Studio locally. Finally, we created a U-SQL project with sample scripts and sample data, and executed the sample scripts successfully without using any cloud resources for local development.
