We have some custom files that we receive from different providers and for these situations we are unable to use standard ETL programs without any customization. Since we’re expanding our ability to read these custom files with .NET, we’re looking for efficient ways to read files with PowerShell that we can use in SQL Server Job Agents, Windows Task Schedulers, or with our custom program, which can execute PowerShell scripts. We have many tools for parsing data and wanted to know efficient ways of reading the data for parsing, along with getting specific lines of data from files by number, or by the first or last line of the file. For reading files efficiently, what are some functions or libraries we can use?

Overview

For reading data from files, we generally want to focus on three major functions for completing these tasks along with some examples listed next to them of these in practice:

- How to read an entire file, part of a file or skip around in a file. We may face a situation where we want to read every line except the first and last.

- How to read a file by using few system resources. We may have a 100GB file that we only want to read 108KB worth of data.

- How to read a file in a manner that easily allows us to parse data we need or allows us to use functions or tools we use with other data. Since many developers have string parsing tools, moving data to a string format – if possible – allows us to re-use many string parsing tools.

The above applies to most situations involved with parsing data from files. We’ll start by looking at a built-in PowerShell function for reading data, then look at a custom way of reading data from files using PowerShell.

PowerShell’s Get-Content function

The latest version of PowerShell (version 5) and many earlier versions of PowerShell come with the Get-Content function and this function allows us to quickly read a file’s data. In the below script, we output an entire file’s data on the PowerShell ISE screen – a screen which we’ll be using for demonstration purposes throughout this article:

|

1 |

Get-Content "C:\logging\logging.txt" |

We can save this entire amount of data into a string, called ourfilesdata:

|

1 2 |

$ourfilesdata = Get-Content "C:\logging\logging.txt" $ourfilesdata |

We get the same result as the above, the only difference here is that we’ve saved the entire file into a variable. We face one drawback to this though, if we save an entire file to a variable or if we output an entire file: if the file size is large, we’ll have read the entire file into variable or output the entire file on the screen. This begins to cost us performance, as we deal with larger file sizes.

We can select a part of the file by treating our variable (object being another name) like a SQL query where we select some of the files instead of all of it. In the code below, we select the first five lines of the file, rather than the entire file:

|

1 2 |

$ourfilesdata = Get-Content "C:\logging\logging.txt" $ourfilesdata | Select-Object -First 5 |

We can also use the same function to get the last five lines of the file, using a similar syntax:

|

1 2 |

$ourfilesdata = Get-Content "C:\logging\logging.txt" $ourfilesdata | Select-Object -Last 5 |

PowerShell’s built-in Get-Content function can be useful, but if we want to store very little data on each read for reasons of parsing, or if we want to read line by line for parsing a file, we may want to use .NET’s StreamReader class, which will allow us to customize our usage for increased efficiency. This makes Get-Content a great basic reader for file data.

The StreamReader library

In a new PowerShell ISE window, we’ll create a StreamReader object and dispose this same object by executing the below PowerShell code:

|

1 2 3 |

$newstreamreader = New-Object System.IO.StreamReader("C:\logging\logging.txt") ### Reading file stuff here $newstreamreader.Dispose() |

In general, anytime we create a new object, it’s a best practice to remove that object, as it releases computing resources on that object. While it’s true that .NET will automatically do this, I still recommend doing this manually, as you may work with languages that don’t automatically do this in the future and it’s a good practice.

Nothing happens when we execute the above code because we’ve called no method – we’ve only created an object and removed it. The first method we’ll look at is the ReadToEnd() method:

|

1 2 3 |

$newstreamreader = New-Object System.IO.StreamReader("C:\logging\logging.txt") $newstreamreader.ReadToEnd() $newstreamreader.Dispose() |

As we see in the output, we can read all the data from the file like with Get-Content using the ReadToEnd() method; how do we read each line of data? Included in the StreamReader class is the ReadLine() method and if we called it instead of ReadToEnd(), we would get the first line of our files data:

|

1 2 3 |

$newstreamreader = New-Object System.IO.StreamReader("C:\logging\logging.txt") $newstreamreader.ReadLine() $newstreamreader.Dispose() |

Since we told the StreamReader to read the line, it read the first line of the file and stopped. The reason for this is that the ReadLine() method only reads the current line (in this case, line one). We must keep reading the file until we reach the end of the file. How do we know when a file ends? The ending line is null. In other words, we want the StreamReader to keep reading the file (while loop) as long as each new line is not null (in other words, has data). To demonstrate this, let’s add a line counter to each line we iterate over so that we can see the logic with numbers and text:

|

1 2 3 4 5 6 7 8 |

$newstreamreader = New-Object System.IO.StreamReader("C:\logging\logging.txt") $eachlinenumber = 1 while (($readeachline =$newstreamreader.ReadLine()) -ne $null) { Write-Host "$eachlinenumber $readeachline" $eachlinenumber++ } $newstreamreader.Dispose() |

As StreamReader reads each line, it stores the line’s data into the object we’ve created $readeachline. We can apply string functions to this line of data on each pass, such as getting the first ten characters of line of data:

|

1 2 3 4 5 6 7 |

$newstreamreader = New-Object System.IO.StreamReader("C:\logging\logging.txt") $eachlinenumber = 1 while (($readeachline = $newstreamreader.ReadLine()) -ne $null) { $readeachline.Substring(0,10) } $newstreamreader.Dispose() |

We can extend this example and call two other string methods – this time including the string methods IndexOf and Replace(). We only call these methods on the first two lines by only getting the lines less than line three:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 |

$newstreamreader = New-Object System.IO.StreamReader("C:\logging\logging.txt") $eachlinenumber = 1 while (($readeachline = $newstreamreader.ReadLine()) -ne $null) { if ($eachlinenumber -lt 3) { Write-Host "$eachlinenumber" $readeachline.Substring(0,12) $readeachline.IndexOf(" ") $readeachline.Replace(" ","replace") } $eachlinenumber++ } $newstreamreader.Dispose() |

For parsing data, we can use our string methods on each line of the file – or on a specific line of the file – on each iteration of the loop.

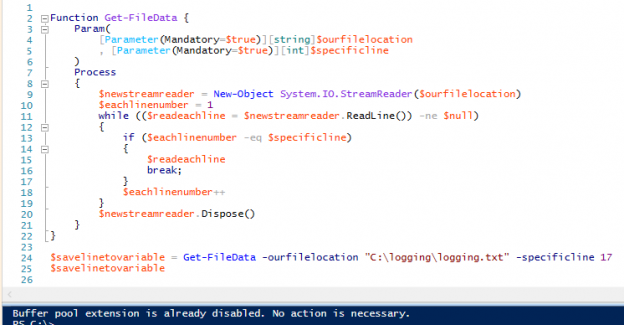

Finally, we want to be able to get a specific line from the file – we can get the first and last line of the file by using Get-Content. Let’s use StreamReader to bet a specific line number that we pass to a custom function that we create. We’ll create a reusable function that returns a specific line number, next we want to wrap our function for re-use while requiring two inputs: the file location and the specific line number we want to return.

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 |

Function Get-FileData { Param( [Parameter(Mandatory=$true)][string]$ourfilelocation , [Parameter(Mandatory=$true)][int]$specificline ) Process { $newstreamreader = New-Object System.IO.StreamReader($ourfilelocation) $eachlinenumber = 1 while (($readeachline = $newstreamreader.ReadLine()) -ne $null) { if ($eachlinenumber -eq $specificline) { $readeachline break; } $eachlinenumber++ } $newstreamreader.Dispose() } } $savelinetovariable = Get-FileData -ourfilelocation "C:\logging\logging.txt" -specificline 17 $savelinetovariable |

If we check, line 17 returns “Buffer pool extension is already disabled. No action is necessary.” correctly. In addition, we break the if statement – as there’s no need to continue reading the file once we obtain the line of data we want. Also, the dispose method does end the object, as we can check by calling the method from the command line in PowerShell ISE and it will return nothing (we could also check within the function and get the same result):

Final thoughts

For custom performance, StreamReader offers more potential, as we can read each line of data and apply our additional functions as we need. But we don’t always need something to customize and we may only want to read a few first and last lines of data, in which case the function Get-Content suits us well. In addition, smaller files work well with Get-Content as we’re never getting too much data at a time. Just be careful if the files tend to grow in time.

- Data Masking or Altering Behavioral Information - June 26, 2020

- Security Testing with extreme data volume ranges - June 19, 2020

- SQL Server performance tuning – RESOURCE_SEMAPHORE waits - June 16, 2020