The SQL Server transaction log is akin to the ‘black box’ in an airliner. It contains a record of every transaction made against a database. This information is a proverbial goldmine for database audits, recoveries, etc., but it was never meant to be exposed to end users, let alone visualized in an easy-to-read manner or used for DBA tasks. As such, utilizing this information can be a challenge, to say the least.

To illustrate, let’s open an LDF file in a hex editor and see what’s inside. As you can see, this data was never meant to be processed directly by humans.

Despite the challenges inherent in the data format of LDF and TRN files, there are some solutions that demystify this information to the point where it begins to be useful. We’ll examine a few of these in this article.

Using fn_dblog

fn_dblog is an undocumented SQL Server function that can be used to read the active portion of an online SQL Server transaction log.

Let’s walk through this solution and examine the results:

- Run fn_dblog

      SELECT * FROM sys.fn_dblog(NULL, NULL);

  The results include 129 columns by default. Our next step is to narrow the result set down to something more manageable, such as a single operation type, e.g. inserts.

- Narrow results to match our search

  Let’s drill down into the results to get a more precise set of information.

  To see transactions for inserted rows, run:

      SELECT [Current LSN],
             Operation,
             Context,
             [Transaction ID],
             [Begin time]
      FROM sys.fn_dblog(NULL, NULL)
      WHERE Operation IN ('LOP_INSERT_ROWS');

  Similarly, we can do the same for deleted rows:

      SELECT [begin time],
             [rowlog contents 1],
             [Transaction Name],
             Operation
      FROM sys.fn_dblog(NULL, NULL)
      WHERE Operation IN ('LOP_DELETE_ROWS');

- Find the appropriate columns that store the values

  To find the columns that store values for inserted or deleted rows, look for these headers: ‘RowLog Contents 0’, ‘RowLog Contents 1’, ‘RowLog Contents 2’, ‘RowLog Contents 3’, ‘RowLog Contents 4’, ‘Description’ and ‘Log Record’.

  The challenge is that data is scattered across different columns for different operations, so the user has to map the columns for each transaction type. Since this is an undocumented function, no official map or documentation exists, which makes reading the proverbial tea leaves that are the results of fn_dblog quite difficult.

  To compound the difficulty further, rows for deleted and inserted data are presented as hexadecimal. Converting this data to meaningful information requires deep knowledge of the format, status bits, total columns, etc. Reading Egyptian hieroglyphics might be easier in some cases.

- Convert the data to usable information

  To complete the process, we must convert the binary data to textual/table data, taking into consideration the data type of each column. Conversion mechanisms vary depending on the column, data type, etc.
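As a rough illustration of what this conversion involves, the sketch below decodes one row image in Python. It assumes the commonly described fixed/variable record layout (two status bytes, a 2-byte offset to the end of the fixed-length data, the fixed-length columns, a 2-byte column count, a NULL bitmap, then the variable-length column count, end offsets and data) and a hypothetical table with one INT column followed by one VARCHAR column. The function name, the sample bytes and the code page are assumptions made for the example; they are not output from a real log.

```python
import struct


def decode_int_varchar_row(hex_text):
    """Decode a row image for a hypothetical table (id INT, name VARCHAR)."""
    # Varbinary values are displayed with a leading 0x; strip it if present.
    if hex_text.lower().startswith("0x"):
        hex_text = hex_text[2:]
    raw = bytes.fromhex(hex_text)

    # Bytes 0-1 are status bits; bytes 2-3 give the offset where the
    # fixed-length data ends (little-endian).
    (fixed_end,) = struct.unpack_from("<H", raw, 2)
    # The only fixed-length column is a 4-byte INT starting at byte 4.
    (row_id,) = struct.unpack_from("<i", raw, 4)
    # The 2-byte column count follows the fixed-length data.
    (column_count,) = struct.unpack_from("<H", raw, fixed_end)
    # The NULL bitmap holds one bit per column, rounded up to whole bytes.
    pos = fixed_end + 2 + (column_count + 7) // 8
    # Next come the number of variable-length columns and one 2-byte
    # end offset per variable-length column.
    (var_count,) = struct.unpack_from("<H", raw, pos)
    pos += 2
    end_offsets = struct.unpack_from("<{}H".format(var_count), raw, pos)
    data_start = pos + 2 * var_count
    # Assumed code page; a real VARCHAR column depends on its collation.
    name = raw[data_start:end_offsets[0]].decode("cp1252")
    return row_id, name


# Hand-built sample bytes for the row (1, 'House'); not taken from a real log.
sample = "0x300008000100000002000001001400486F757365"
print(decode_int_varchar_row(sample))  # (1, 'House')
```

Even for this two-column case the decoder has to know the table definition in advance, which is why every additional data type, NULL value or column order needs its own handling.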

fn_dblog is a very powerful and useful tool, but it takes an expert-level user to use it to its full extent. Even then, it has some quite significant limitations, including:

- DDL auditing is complicated and involves reconstructing the state of certain system tables

- Only the active part of the online transaction log can be read

- There is no means to reconstruct Update DML transactions or BLOBs (see next)

Update operations in SQL Server are not fully logged in the transaction log. Full before-and-after values unfortunately don’t exist, only the delta of the change for that record. For example, SQL Server may show a change from “H” to “M” when the actual change to the record was from “House” to “Mouse”. To piece together the full picture, a process must be devised to manually reconstruct the history of changes, including the state of the record prior to the update. This requires painstakingly reconstructing every record from the original insert to the final update, and everything in between.
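The reconstruction idea can be sketched in a few lines of Python. This is a simplified model and not the actual log format: it assumes each logged update has already been reduced to a hypothetical (offset, old fragment, new fragment) tuple, and that the originally inserted value is known.

```python
def apply_delta(previous, offset, old, new):
    """Apply one logged change (old -> new at offset) to the prior value."""
    if previous[offset:offset + len(old)] != old:
        # The delta does not line up with the value we reconstructed so far,
        # which means part of the change history is missing.
        raise ValueError("delta does not match the prior value")
    return previous[:offset] + new + previous[offset + len(old):]


def replay(inserted_value, deltas):
    """Rebuild the current value from the original insert plus every delta."""
    value = inserted_value
    for offset, old, new in deltas:
        value = apply_delta(value, offset, old, new)
    return value


# The article's example: the log only records "H" -> "M" at position 0.
print(replay("House", [(0, "H", "M")]))  # Mouse
```

The check inside `apply_delta` shows why the full chain matters: if the original insert or any intermediate update is missing, the delta alone cannot be turned back into a complete value.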

BLOBs are another challenge when trying to use fn_dblog to read transaction history. BLOBs, when deleted, are never inserted into the transaction log, so examining the log won’t provide information about a deleted BLOB’s existence unless the original insert can be located. Only by combining these two pieces of data will you be able to recover a deleted BLOB. This obviously requires that the original insert exists in the active/online portion of the transaction log, the only part accessible to fn_dblog. This may be problematic if the original insert was done weeks, months or years earlier and the transaction log has since been backed up or truncated.

Using fn_dump_dblog

Another solution exists in the form of fn_dump_dblog, which overcomes fn_dblog’s limitation of reading only the active/online transaction log.

fn_dump_dblog is able to read native transaction log backups.

Please keep in mind that this function is also undocumented.

- Run fn_dump_dblog for a specific transaction log backup.

  Please note that you have to supply every parameter explicitly, including the 63 trailing DEFAULT values in this case:

      SELECT *
      FROM fn_dump_dblog(
          NULL, NULL, N'DISK', 1,
          N'F:\Mybackups\InventoryDatabase_4589725r.trn',
          DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT,
          DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT,
          DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT,
          DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT,
          DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT,
          DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT,
          DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT);

  As with fn_dblog, 129 columns are returned, so returning only the specific ones you need is recommended:

      SELECT [Current LSN], Context, [transaction name], Operation, [Transaction ID], Description
      FROM fn_dump_dblog(
          NULL, NULL, N'DISK', 1,
          N'F:\Mybackups\InventoryDatabase_4589725r.trn',
          DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT,
          DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT,
          DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT,
          DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT,
          DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT,
          DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT,
          DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT);

  Once again, we need to dust off our hex editor to decipher this information, and we face similar challenges and obstacles with UPDATEs and BLOBs.
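Because every call needs the same long run of DEFAULT arguments, the statement text can be generated rather than typed by hand. The following Python sketch only builds the query string shown above; the helper name `build_fn_dump_dblog_query` is hypothetical, and executing the result against a server is left to whatever client you already use.

```python
def build_fn_dump_dblog_query(backup_path, columns=("*",)):
    """Return the text of a fn_dump_dblog query for one log backup file."""
    # Double any single quotes so the path is a valid T-SQL string literal.
    path_literal = "N'" + backup_path.replace("'", "''") + "'"
    # Start LSN, end LSN, device type, backup position and the backup file,
    # followed by the 63 DEFAULT arguments used in the queries above.
    args = ["NULL", "NULL", "N'DISK'", "1", path_literal] + ["DEFAULT"] * 63
    return "SELECT {}\nFROM fn_dump_dblog(\n    {}\n);".format(
        ", ".join(columns), ", ".join(args)
    )


query = build_fn_dump_dblog_query(
    r"F:\Mybackups\InventoryDatabase_4589725r.trn",
    columns=("[Current LSN]", "Operation", "[Transaction ID]"),
)
print(query)
```

Column names that contain spaces are passed already wrapped in square brackets, exactly as they appear in the hand-written query.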

The information this function returns also enables a potentially very helpful and powerful technique: you can restore a database to a specific point in time, before a particular operation occurred. By doing this you can, in essence, “recover” from a bad/rogue transaction by going back in time and eliminating it.

To do this, follow these steps:

- Determine the LSN for the particular transaction you want to “reverse”

- Convert this LSN into the format used in the WITH STOPBEFOREMARK = ‘<mark_name>’ clause. Each colon-separated part is converted from hexadecimal to decimal, with the second part padded to 10 digits and the third to 5. For example, 00000070:00000011:0001 should be transformed into 112000000001700001

- Restore the full chain of transaction log backups until you reach the time when the transaction(s) occurred. Use the WITH STOPBEFOREMARK = ‘<mark_name>’ clause to specify the previously obtained transaction LSN

      RESTORE LOG InventoryDatabase
      FROM DISK = N'F:\Mybackups\InventoryDatabase_4589725r.trn'
      WITH STOPBEFOREMARK = 'lsn:112000000001700001',
           NORECOVERY;
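The LSN conversion in the second step is plain arithmetic, so it is easy to script. Here is a minimal Python sketch; the function name `lsn_to_decimal` is made up for this example, and it assumes the LSN is given in the colon-separated hexadecimal form shown in the [Current LSN] column.

```python
def lsn_to_decimal(lsn):
    """Convert an LSN such as 00000070:00000011:0001 to its decimal form."""
    # The three parts are hexadecimal numbers separated by colons.
    first, second, third = (int(part, 16) for part in lsn.split(":"))
    # First part as-is, second padded to 10 digits, third padded to 5 digits.
    return "{}{:010d}{:05d}".format(first, second, third)


mark = "lsn:" + lsn_to_decimal("00000070:00000011:0001")
print(mark)  # lsn:112000000001700001
```

The resulting string is the value passed to STOPBEFOREMARK in the RESTORE LOG statement above.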

Using DBCC PAGE

Next on our list is DBCC PAGE, another useful command for reading the SQL Server transaction log. It can read only MDF and LDF database files and, like the others, it is undocumented.

    DBCC PAGE ( {'dbname' | dbid}, filenum, pagenum [, printopt={0|1|2|3} ])

Use the following syntax to read the first page in our example database’s online transaction log:

    SELECT FILE_ID ('InventoryDatabase_Log') AS 'File ID'
    -- to determine Log file ID = 2
    DBCC PAGE (InventoryDatabase, 2, 0, 2)

The results will be as follows:

    DBCC execution completed. If DBCC printed error messages,
    contact your system administrator.

By default, the output is not displayed unless you first turn on trace flag 3604. Once it is set, the output will be displayed:

    DBCC TRACEON (3604, -1)

Now re-execute:

    DBCC PAGE (InventoryDatabase, 2, 0, 2)

The result contains quite a few errors, bad headers and other visual noise. These can be ignored, but the results will still be in hexadecimal format.

Hexadecimal output is really not meant to be read by humans, which makes turning this data into meaningful decisions difficult.

Use ApexSQL Log

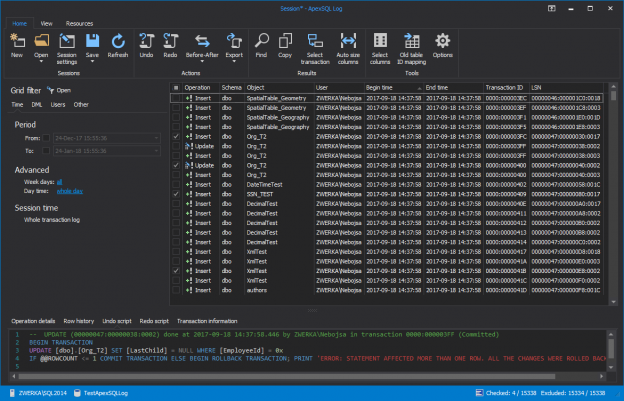

ApexSQL Log is a well-known third-party SQL Server transaction log reader, auditing and recovery tool. It can read online and detached transaction logs as well as transaction log backups, including compressed ones.

ApexSQL Log can read all transactions, including DDL and DML, in a chain of transaction log data sources and create scripts to Undo or Redo them. It can even read transactions that occurred before the product was installed.

ApexSQL Log, unlike the aforementioned solutions, adds significant value to log reading by turning cryptic raw log data into easily processed and understood information, including the object, dates and times, and change history with before and after values.

To use this tool, follow these steps:

- Start the ApexSQL Log application

- Connect to a database. Normally this is the database whose transaction logs you want to read, but it doesn’t have to be, as you can add offline and backed up logs for other databases

- Select the logs you want to read in the Select datasources step, making sure to construct a full chain. Use the Add button to add other datasources like detached LDF files and transaction log backups

- Select the output type in the next step. To view the data in a grid, select Open results in grid. This populates the ApexSQL Log visual grid with the contents of the transaction log in an easy-to-read manner and offers lots of value-added processing features like sorting, searching, etc.

- To refine your results, use the Filter setup to filter by operation type (e.g. DML), object or user name, time ranges and more

- Click the Finish button

Detailed results, without the visual noise and extraneous data, are presented in an easy-to-understand and easy-to-use manner.

Now you can really decipher the transaction log and unlock its true value. You will be able to see the time the operation began and ended, the operation type, the schema and object name of the object affected, the name of the user who executed the operation, and the computer and application used to execute it. For UPDATEs, you’ll see the old and the new value of the updated fields, aka before-and-after auditing. In addition, you can use ApexSQL Log for disaster recovery purposes, to easily create Undo scripts to reverse batches of transactions or Redo scripts to replay them.

ApexSQL Log can be set up in continuous auditing mode to run unattended and continuously write the transaction log back to the database itself, with no gaps in records or duplicated records, so the transaction log data can be queried just like the database.

For database replication, ApexSQL Log can easily be configured to read the transactions from one database and write them to another.

To avoid hex values, undocumented functions, unclear column content, long queries, complex action steps, and incomplete UPDATE and BLOB reconstruction when reading SQL Server transaction logs, use ApexSQL Log. It will read transaction logs for you and present the results “in plain English”. Besides that, undo and redo scripts, database replication and continuous auditing are just a click away.

- Reading the transaction log in SQL Server – from hacks to solutions - July 3, 2018