CHECKSUM is an option for page verification that is available at the database instance level and we can see what level of verification each of our databases are currently using by the following query:

|

1 2 3 |

SELECT name, page_verify_option_desc FROM sys.databases |

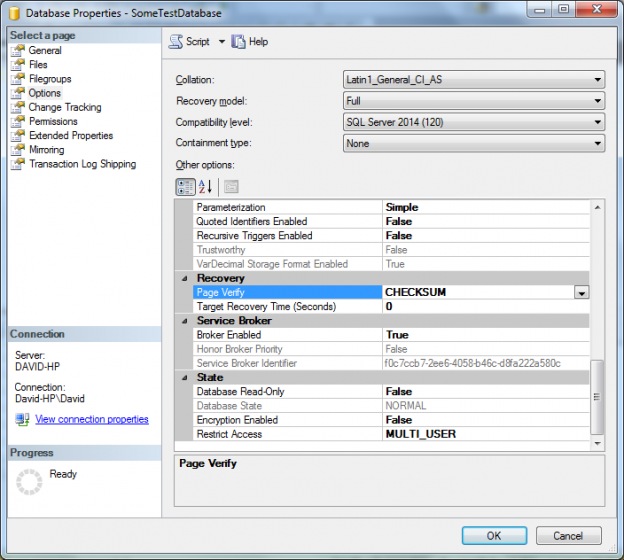

We can also view the database options under its properties to see what option is enabled:

In most cases we’ll notice that the Page Verify option is set to CHECKSUM and that’s because nowadays this is the default option for all databases. For Pre-SQL 2005 versions this was not the case, so occasionally when looking into database instances that have been migrated from earlier versions we might see one of the other Page Verify options selected; they are TORN PAGE DETECTION or NONE.

Having no page verification is a risky idea; the whole purpose of the Page Verify option is to provide a crucial check between when a page is written to disk and when it is read again to ensure its consistency, this gives an indicator to possible corruption issues at the IO subsystem so it is clearly a vital option to check and be aware of.

The TORN PAGE DETECTION option operates in a similar way to CHECKSUM. When a page is written the first 2 bytes of each 512 byte sector is stored in the page header and when the page is read back again SQL Server makes a comparison between the stored information and the sector bytes to detect any discrepancy and return an error if the comparison fails. The CHECKSUM option however bases its verification on a value calculated by using the entire page making the comparison between operations a much more thorough and effective option for page verification. In fact Books Online makes its recommendation very clear:

Best Practices Recommendations

Set the PAGE_VERIFY database option to CHECKSUM.

So using a test database let’s have a look at the CHECKSUM option in action. I have a very small table consisting of three rows and a clustered index on the ID field and a non-clustered index on the LastName field.

As I have already written the data to disk I am going to use the undocumented DBCC WRITEPAGE command to force an alteration to one of the pages making it “inconsistent” and hopefully picked up by our CHECKSUM operation.

Before I can do that I need to use another undocumented command DBCC IND to return the relevant Page ID’s for my non-clustered index as I want to ensure I am forcing an edit on the correct type of object. I need the relevant index ID so I will use the following query to return the specific ID for my non-clustered index:

|

1 2 3 |

SELECT * FROM sys.indexes WHERE name like 'NonClus%' |

Which returns:

Now I can use this ID with the DBCC IND command to find it’s corresponding pages. In this instance I am looking for rows with a PageType of 2, this being an index page.

On the bottom row I can see the PagePID value of 166 and I can now pass this into the DBCC WRITEPAGE command to force an alteration; it uses the following syntax:

DBCC WRITEPAGE ({dbname | dbid}, fileid, pageid, offset, length, data , directORbufferpool)

The final option directORbufferpool will be set to 1, this tells SQL to not write the change to the buffer pool first but write straight to disk. For this test we need to do this because making the change to the buffer pool will also create a new checksum making the change perfectly valid, which we don’t want to do. By issuing the command when the database is in single user mode I can create the page inconsistency scenario that we’re looking to test:

|

1 2 3 4 5 6 7 |

ALTER DATABASE SomeTestDatabase SET SINGLE_USER WITH ROLLBACK IMMEDIATE DBCC WRITEPAGE('SomeTestDatabase', 1, 166, 60, 1, 0x00, 1) ALTER DATABASE SomeTestDatabase SET MULTI_USER |

Bringing the database back into multi user mode doesn’t return any errors, this is expected as the checksum operation will only occur when the page is read so let’s try a simple SELECT statement:

|

1 2 3 4 |

SELECT * FROM [SomeTestDatabase].[dbo].[tblTestingTable] |

In this case the query has worked perfectly fine, the rows have been returned and the query results have been displayed.

This is because we “corrupted” the non-clustered index and as the clustered index represents the data itself we are not actually using the non-clustered index for this particular query, we can view the execution plan to confirm this:

In order to use the non-clustered index we will add a hint to our SELECT query to effectively force SQL Server to use the non-clustered index (Index ID = 2) that we have modified with the DBCC WRITEPAGE command.

|

1 2 3 4 5 |

SELECT * FROM [SomeTestDatabase].[dbo].[tblTestingTable] WITH (INDEX(2)) |

Msg 824, Level 24, State 2, Line 2

SQL Server detected a logical consistency-based I/O error: incorrect checksum (expected: 0x9d3ce900; actual: 0x9d3ce950). It occurred during a read of page (1:166) in database ID 6 at offset 0x0000000014c000 in file

Now SQL Server has notified us of an incorrect checksum in the read operation of page 1:166 and the generated error message actually shows us the checksum value that the SELECT expected and what was returned.

We can also see at the very beginning of the message that the error number is 824. This error, along with 823 and 825 specifically highlight potential corruption issues and we can trap and perform a notification of these error types using native functionality supplied by SQL Server Agent alerts.

|

1 2 3 4 5 6 7 8 9 10 11 |

EXEC msdb.dbo.sp_add_alert @name=N'Error 824 - Error on SQL Server Page Read', @message_id=824, @severity=0, @enabled=1, @delay_between_responses=0, @include_event_description_in=0, @category_name=N'[Uncategorized]', @job_id=N'00000000-0000-0000-0000-000000000000' GO |

Here’s an example of creating an alert to capture Error 824 and we can then add a response to the alert to send an email to a group address warning us of any potential errors. This is highly recommended as these particular errors could indicate potential errors in the IO subsystem that require urgent attention.

In addition to the CHECKSUM error being reported from a query we can run a database level consistency check and see the error being returned as well:

Msg 8939, Level 16, State 98, Line 1

Table error: Object ID 245575913, index ID 2, partition ID 72057594040877056, alloc unit ID 72057594046119936 (type In-row data), page (1:166). Test (IS_OFF (BUF_IOERR, pBUF->bstat)) failed. Values are 133129 and -4.

Msg 8928, Level 16, State 1, Line 1

Object ID 245575913, index ID 2, partition ID 72057594040877056, alloc unit ID 72057594046119936 (type In-row data): Page (1:166) could not be processed. See other errors for details.

Msg 8980, Level 16, State 1, Line 1

Table error: Object ID 245575913, index ID 2, partition ID 72057594040877056, alloc unit ID 72057594046119936 (type In-row data). Index node page (0:0), slot 0 refers to child page (1:166) and previous child (0:0), but they were not encountered.

CHECKDB found 0 allocation errors and 3 consistency errors in table ‘tblTestingTable’ (object ID 245575913).

CHECKDB found 0 allocation errors and 3 consistency errors in database ‘SomeTestDatabase’.

repair_allow_data_loss is the minimum repair level for the errors found by DBCC CHECKDB (SomeTestDatabase).

As we know the modification was made in the non-clustered index the resolution is fairly straightforward as we just need to drop and recreate the index.

|

1 2 3 4 5 6 7 8 9 10 11 |

DROP INDEX [NonClusteredIndex-20170313-175804] ON [dbo].[tblTestingTable] GO CREATE NONCLUSTERED INDEX [NonClusteredIndex-20170313-175804] ON [dbo].[tblTestingTable] ( [LastName] ASC )WITH (PAD_INDEX = OFF, STATISTICS_NORECOMPUTE = OFF, SORT_IN_TEMPDB = OFF, DROP_EXISTING = OFF, ONLINE = OFF, ALLOW_ROW_LOCKS = ON, ALLOW_PAGE_LOCKS = ON) GO |

We can see how important it is to have the CHECKSUM option enabled for page verification, apart from a small amount of overhead there really is no downside to using it and even then the overhead is insignificant when compared to the type of errors it is checking for.

When changing a database to use CHECKSUM it is important to know that the option isn’t used for pages that have already been written to disk and as such it will only be applied to new pages are written or when existing pages are modified and re-written to the IO subsystem.

A common misconception regarding the CHECKSUM option is that it replaces the need for consistency checking databases. This is a very incorrect assumption; as we’ve seen the CHECKSUM error is only reported on the read operation of an inconsistent page, if the page isn’t read the error isn’t reported. However the biggest reason is that the DBCC CHECKDB routine performs a lot more thorough checking at the database level and includes checks that a CHECKSUM simply doesn’t cover, therefore the best practice is to combine CHECKSUM page verification and regular consistency checks.

- Monitoring SQL Server with Dynamic Management Objects – Requests - May 17, 2017

- Monitoring SQL Server with Dynamic Management Objects – Sessions and connections - May 12, 2017

- CHECKSUM page verification in SQL Server - March 21, 2017