This article provides a quick overview of Azure Machine Learning, an end-to-end cloud framework that can help you build, manage and deploy up to thousands of machine learning models. We provide a quick tutorial that shows how to take your existing, pre-trained machine learning models and migrate them into the Azure ML framework.

What is Azure Machine Learning?

Azure Machine Learning (also known as Azure ML) is a cloud-based platform for machine learning operations. It supports the full range of machine learning workloads, from classic algorithms to neural networks, and covers supervised, unsupervised, and reinforcement learning.

Azure ML offers two main ways to build and operate machine learning models:

- Writing code in Python or R with the Azure ML SDK

- Using a no-code, drag-and-drop designer

Azure ML provides the following features:

- Azure Machine Learning Designer: drag and drop modules to create machine learning experiments and deploy them to production

- Jupyter Notebooks: use the example notebooks provided by Azure, or create your own using the Python SDK

- R scripts: write R code using the Azure ML SDK for R, or use R modules in the designer

- Many Models Solution Accelerator: train and operate up to thousands of machine learning models

- Visual Studio Code extension: work with machine learning models directly in the Visual Studio Code editor

- Machine learning CLI: manage machine learning models from the command line, or automate model operations with scripts

- Support for open-source frameworks: integrates with TensorFlow, PyTorch, and scikit-learn

- Reinforcement learning: support for Ray RLlib

- Machine learning workflows: use MLflow to track the metrics and artifacts of your runs and to deploy models, or use Kubeflow to build end-to-end pipelines

Figure: Azure Machine Learning Designer

How Azure Machine Learning works

Azure Machine Learning uses a few key concepts to operationalize ML models: workspaces, datasets, datastores, models, and deployments. Below we describe each of these elements.

Workspaces

A machine learning workspace is a central, shareable location where you perform:

- Resource management for model training and deployment, including compute resources

- Management of the assets you produce as you build and run machine learning models

The workspace relies on several other Azure resources that your machine learning models use:

- Azure Container Registry (ACR): stores the Docker container images used during model training and deployment

- Azure Storage Account: the workspace's primary data storage location, holding items such as training datasets and Jupyter notebooks

- Azure Key Vault: stores sensitive data the workspace needs, such as secrets

- Azure Application Insights: monitors and analyzes model execution and performance

The workspace is a top-level resource that includes all other elements you create—datasets, datastores, and models.
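The Python SDK usually connects to a workspace through a small `config.json` file. `Workspace.from_config()` reads that file, and you can download it from the Azure portal. The stdlib-only sketch below shows what the file identifies: the subscription, the resource group, and the workspace name. The helper `load_workspace_config` and the sample values are hypothetical. In practice the SDK reads the file for you.

```python
import json
import tempfile
from pathlib import Path

# Keys that Workspace.from_config() expects to find in config.json
REQUIRED_KEYS = ("subscription_id", "resource_group", "workspace_name")


def load_workspace_config(path):
    """Hypothetical helper: read a workspace config.json and check its keys."""
    config = json.loads(Path(path).read_text())
    missing = [key for key in REQUIRED_KEYS if key not in config]
    if missing:
        raise KeyError(f"config.json is missing: {', '.join(missing)}")
    return config


if __name__ == "__main__":
    # Hypothetical values; download the real file from the Azure portal
    sample = {
        "subscription_id": "<subscription-id>",
        "resource_group": "myresourcegroup",
        "workspace_name": "myworkspace",
    }
    with tempfile.TemporaryDirectory() as tmp:
        path = Path(tmp) / "config.json"
        path.write_text(json.dumps(sample))
        print(load_workspace_config(path)["workspace_name"])  # myworkspace
```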

Datasets and Datastores

Azure Machine Learning datasets let you access and work with your data. A dataset is a virtual entity: it holds a reference to the data source and a copy of the source’s metadata, not the data itself. Because the data stays in place, datasets add no storage cost and do not put the integrity of the source data at risk.

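Datastores hold the connection information for the Azure storage services where your data lives. That information, such as your subscription ID and token authorization, is stored separately in the workspace Key Vault, so credentials do not need to appear in your code.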
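To make the "reference, not copy" idea concrete, here is a stdlib-only sketch. `DatasetReference` is a hypothetical class and not the Azure ML `Dataset` API. It stores only a location and some metadata, and it reads the underlying file only when asked, so no data is duplicated.

```python
import csv
import tempfile
from dataclasses import dataclass, field
from pathlib import Path


@dataclass
class DatasetReference:
    """Hypothetical stand-in for a dataset: a pointer to data plus metadata."""
    uri: str  # where the data lives; the data itself is never copied here
    metadata: dict = field(default_factory=dict)

    def read_rows(self):
        # Data is read from its original location only when it is needed
        with open(self.uri, newline="") as f:
            return list(csv.DictReader(f))


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        source = Path(tmp) / "reviews.csv"
        source.write_text("text,label\ngreat product,1\nbroke in a day,0\n")
        ds = DatasetReference(str(source), {"format": "csv", "columns": ["text", "label"]})
        print(len(ds.read_rows()))  # 2
```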

Models

A model is code that takes inputs, applies a machine learning algorithm, and produces an output. Creating a model in Azure ML involves:

- Selecting algorithms

- Providing them with data

- Tuning hyperparameters

- Performing multiple iterations of training, resulting in a trained model
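A toy example can illustrate these four steps. The sketch below is not Azure ML code, and the data and learning-rate grid are made up for illustration. It works as follows:

- It selects a simple algorithm: one-variable linear regression trained by gradient descent.
- It provides that algorithm with data.
- It tries several learning rates as the hyperparameter, running many training iterations for each.
- It keeps the model with the lowest validation error.

```python
def train(xs, ys, learning_rate, epochs=500):
    """Fit y = w * x + b by minimizing mean squared error with gradient descent."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):  # multiple training iterations
        grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


def mse(model, xs, ys):
    """Mean squared error of a (w, b) model on the given data."""
    w, b = model
    return sum((w * x + b - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


# Made-up data that follows y = 2x + 1
train_x, train_y = [0, 1, 2, 3, 4], [1, 3, 5, 7, 9]
val_x, val_y = [5, 6], [11, 13]

# Tune the learning-rate hyperparameter and keep the best model on validation data
results = {}
for lr in (0.001, 0.01, 0.05):
    model = train(train_x, train_y, lr)
    results[lr] = (mse(model, val_x, val_y), model)

best_lr = min(results, key=lambda lr: results[lr][0])
best_model = results[best_lr][1]
print(best_lr, best_model)
```

With only 500 iterations, the smaller learning rates do not converge, so validation error selects the largest stable rate.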

Models trained outside of Azure ML with popular frameworks such as PyTorch, scikit-learn, TensorFlow, or XGBoost can be imported into the workspace and registered there. You can also retrain an existing model by submitting a training run to an Azure compute target.

Deployments

A registered model can be deployed to production as a service endpoint. Here’s what you need:

- Environment: includes all the dependencies needed to run your model

- Scoring code: a script that accepts inputs, scores them using the model, and returns the results

- Inference configuration: references the environment, the scoring code, and any other components required to run the model as a service

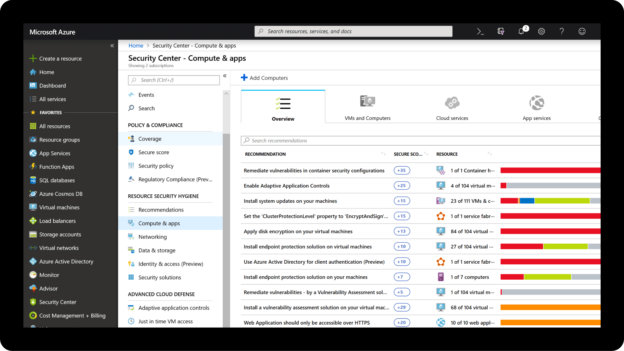

Security Dashboard of Currently-Deployed Machine Learning Models

Quick Tutorial: How to migrate existing Machine Learning Models to Azure ML

In this quick tutorial, you will learn to register and deploy machine learning models that were trained outside of Azure ML. You can deploy the models either as web services or to IoT Edge devices. Once a model is deployed, Azure can monitor its execution and detect data drift. The code is based on the Azure documentation.

Prerequisites

- Create an Azure ML workspace

- Install the Azure ML SDK for Python

- Install the Azure CLI and its Machine Learning CLI extension

- Obtain a trained model and save it to the file system of your development machine
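Any model saved to disk works for following along without the sentiment model. The sketch below stands in for the last prerequisite by writing the parameters of a hypothetical linear model to `models/model.json`. The file name and JSON layout are assumptions made for illustration. A real Keras or scikit-learn model would be saved with that framework's own save function or with pickle.

```python
import json
from pathlib import Path


def save_model(weights, bias, model_dir="models"):
    """Write hypothetical linear-model parameters to <model_dir>/model.json."""
    path = Path(model_dir)
    path.mkdir(parents=True, exist_ok=True)  # create the model directory if needed
    model_file = path / "model.json"
    model_file.write_text(json.dumps({"weights": weights, "bias": bias}))
    return model_file
```

Calling `save_model([0.8, -1.2], 0.1)` creates `./models/model.json`, a directory you could then register.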

Register the Model

Registering a model lets Azure ML track and manage its metadata and versions alongside the other models in your workspace.

Let’s assume your existing model directory, ./models, contains the following files:

- Model.h5

- Model.w2v

- Encoder.pkl

- tokenizer.pkl

The following CLI command registers the contents of the ./models directory as a new Azure ML model named sentiment, in the workspace myworkspace within the resource group myresourcegroup:

```shell
az ml model register -p ./models -n sentiment -w myworkspace -g myresourcegroup
```
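You might script registration from Python rather than typing it. One option is to build the same command as an argument list and pass it to `subprocess`. The sketch below reuses only the flags shown in the command above:

- `-p`: model path
- `-n`: model name
- `-w`: workspace
- `-g`: resource group

The function name is hypothetical. Running the command requires the Azure CLI with the ML extension installed and a logged-in session.

```python
import shlex


def register_command(model_path, name, workspace, resource_group):
    """Hypothetical helper: build the `az ml model register` call as an argument list."""
    return [
        "az", "ml", "model", "register",
        "-p", model_path,      # local file or directory to upload
        "-n", name,            # name of the registered model
        "-w", workspace,       # Azure ML workspace
        "-g", resource_group,  # resource group that contains the workspace
    ]


cmd = register_command("./models", "sentiment", "myworkspace", "myresourcegroup")
print(shlex.join(cmd))
# To actually run it (needs `import subprocess`, the Azure CLI, and the ML extension):
# subprocess.run(cmd, check=True)
```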

Define Inference Configuration

The inference configuration specifies the environment and the scoring script needed to deploy your model. The following example shows how to create it with the Python SDK:

```python
from azureml.core import Environment
from azureml.core.conda_dependencies import CondaDependencies
from azureml.core.model import InferenceConfig

# Create an environment and the Conda dependencies object for it
myenv = Environment(name="myenv")
conda_dep = CondaDependencies()

# Define the packages the model and scripts need to function
conda_dep.add_conda_package("tensorflow")
conda_dep.add_conda_package("numpy")
conda_dep.add_conda_package("scikit-learn")
conda_dep.add_pip_package("keras")
conda_dep.add_pip_package("gensim")

# You must list azureml-defaults as a pip dependency
conda_dep.add_pip_package("azureml-defaults")

# Add the Conda dependencies to myenv
myenv.python.conda_dependencies = conda_dep

# Define the inference config with your environment and the scoring script, score.py
inference_config = InferenceConfig(entry_script="score.py", environment=myenv)
```

Scoring Script (score.py)

The scoring script is the entry script for your model. It must define two functions:

- init()

- run(data)

init() runs once when the service starts, and is typically where the model is loaded into memory. run(data) is called for every request: it receives the data sent by the client, runs the model on it, and returns the results.

There is no universal script for all models. You must create a script that specifies how the model loads, what kind of data it expects, and how the model is used to evaluate data.
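Here is a minimal score.py sketch. It assumes a hypothetical model stored as `model.json` (a list of weights and a bias) and requests shaped like `{"data": [[...], ...]}`. It is not the scoring script for the sentiment model, which would load the Keras and pickle files instead. It does show the contract: `init()` loads the model once into a global, and `run()` parses each request, scores it, and returns a JSON-serializable result. Azure ML sets the `AZUREML_MODEL_DIR` environment variable to the location of the deployed model files. The exact subpath below it depends on how the model was registered, so adjust the path for your model.

```python
import json
import os

model = None  # populated once by init()


def init():
    """Called once when the service starts: load the model into memory."""
    global model
    # "model.json" is a hypothetical file name; adjust the subpath to your registration
    model_dir = os.getenv("AZUREML_MODEL_DIR", ".")
    with open(os.path.join(model_dir, "model.json")) as f:
        model = json.load(f)


def run(raw_data):
    """Called for each request: parse JSON input, score it, return serializable output."""
    try:
        rows = json.loads(raw_data)["data"]
        predictions = [
            sum(w * x for w, x in zip(model["weights"], row)) + model["bias"]
            for row in rows
        ]
        return {"predictions": predictions}
    except Exception as exc:  # report the error to the caller instead of crashing
        return {"error": str(exc)}
```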

Define Deployment

The azureml.core.webservice module provides Webservice classes for the supported deployment targets, and the class you use determines where the model is deployed. For example, to deploy the service to Azure Kubernetes Service (AKS), create a deployment configuration with AksWebservice.deploy_configuration().

You can also deploy locally with LocalWebservice.deploy_configuration(), but first make sure Docker is installed on your local machine.

Deploy the Model

Finally, take the sentiment model registered earlier and deploy it as a service:

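```python
from azureml.core import Workspace
from azureml.core.model import Model

# Connect to the workspace described by a local config.json file
ws = Workspace.from_config()
# Retrieve the registered sentiment model
model = Model(ws, name="sentiment")

# Define the service that will run the sentiment model. inference_config comes from the
# earlier step; deployment_config is returned by your Webservice class's deploy_configuration()
service = Model.deploy(ws, "myservice", [model], inference_config, deployment_config)

# Wait until the service is ready to accept client requests, showing progress
service.wait_for_deployment(show_output=True)
print(service.state)
print("scoring URI: " + service.scoring_uri)
```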
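Once the service is running, clients call it by POSTing JSON to `service.scoring_uri`. Below is a stdlib sketch of the client side. It rests on two assumptions:

- The service accepts a `{"data": ...}` payload. The real shape depends entirely on your scoring script.
- If key authentication is enabled, the key is sent as a Bearer token.

The helper name is hypothetical, and the URI shown is only a placeholder.

```python
import json
import urllib.request


def build_scoring_request(scoring_uri, payload, key=None):
    """Hypothetical helper: prepare a POST request carrying JSON to a scoring URI."""
    headers = {"Content-Type": "application/json"}
    if key:  # only needed when key-based authentication is enabled
        headers["Authorization"] = f"Bearer {key}"
    body = json.dumps(payload).encode("utf-8")
    return urllib.request.Request(scoring_uri, data=body, headers=headers, method="POST")


# Placeholder URI; use service.scoring_uri from your deployment
req = build_scoring_request("http://localhost:6789/score", {"data": [[1, 2]]})
# Sending it requires a running service:
# with urllib.request.urlopen(req) as resp:
#     print(json.loads(resp.read()))
```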

To deploy the model from the CLI instead, pass the inference configuration and the deployment configuration as JSON files:

```shell
az ml model deploy -n myservice -m sentiment:1 --ic inferenceConfig.json --dc deploymentConfig.json
```
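The CLI reads the deployment target from deploymentConfig.json. As a sketch, the snippet below writes one for a local, Docker-based deployment. The field names `computeType` and `port` follow the v1 ML CLI deployment configuration schema as we understand it. Treat them as an assumption and check the CLI reference for your version before relying on them.

```python
import json
from pathlib import Path


def write_local_deployment_config(path="deploymentConfig.json", port=32267):
    """Write a deployment config for a local web service.

    Field names are assumed from the v1 ML CLI schema; verify them for your CLI version.
    """
    config = {"computeType": "local", "port": port}
    Path(path).write_text(json.dumps(config, indent=4))
    return config
```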

Conclusion

We covered the basic concepts of Azure Machine Learning (workspaces, datasets, datastores, models, and deployments) and showed how to register an existing machine learning model in an Azure ML workspace and deploy it. This lets you take a model trained with a popular framework such as scikit-learn or PyTorch and operationalize it with the features Azure Machine Learning provides. The end result is a model exposed as a web service, ready for production use by applications and end users.

- How to migrate to Azure ML: A quick start guide - February 5, 2021