Introduction

In a previous article “SQL Server Audit feature – discovery and architecture“, we’ve seen the basics to build an auditing system based on SQL Server Audit feature. In the introduction, we talked a little bit about the « General Data Protection Regulation » a.k.a. GDPR that allows personal data circulation (in Europe) that is a subject in the mouth of a lot of IT professionals.

GDPR makes audit a requirement and that’s a sufficient reason to take a look at different solution to achieve the same goal. For each one, we can pinpoint advantages and disadvantages of adopting it and eventually take a final decision to define which the «best» tool is.

In this article, we will take a close look at three ways to achieve the following goal: audit all DDL activity. We will review implementation of this goal using:

- Home-made DDL Triggers,

- SQL Trace

- SQL Server Audits (which are built on top of Extended Events)

I recommend users interested in security and particularly auditing to read Minette Steynberg’s article on SQLShack.com entitled “Creating a successful auditing strategy for your SQL Server databases“.

As you could expect, in the following sections, we will then implement the example of auditing DDL activity at the server and database levels in three manners using DDL Triggers, default SQL Trace mining and finally SQL Server Audits. This will allow us to enlist pros and cons to the adoption of each of these three methods, at least in my point of view. Once we are done with the example, we could conclude on the best choice from my own perspective.

A concrete example: auditing DDL events

In my opinion, there is no fixed rule that should apply once for all, for every company, for every application or software. It’s actually the responsibility of the audit implementer to choose the appropriate solution for a given situation. After all, the only question his manager will ask him is to know whether there is or not an audit on a particular aspect of SQL Server.

But, there are so many elements that can influence this implementation:

- Degree of knowledge and experience of the audit implementer. We tend to choose the solution that is familiar.

- Application environment or context. For instance: SQL Server version and edition or available disk space that we can dedicate to auditing.

- Business requirements and needs for auditing. Sometimes, they are not justified, but have to be implemented, sometimes it’s the contrary like enabling C2 auditing for non-C2 certified environments.

- Available resources: number of persons to assign, time, efforts, budget…

But this is not the subject here, so to convince you that « All Roads Lead to Rome », let’s implement DDL Auditing using three different techniques (included SQL Audits). We will then review them and try to find advantages to use SQL Audits in comparison to the two other techniques.

Note

Alternatively, we can use ApexSQL Log to perform DDL auditing.

Auditing DDL Events with triggers

As we have seen in the presentation of the architecture of SQL Audits, there are mainly two kinds of DDL events: those which are database-related and those which are server-related. It’s the same for DDL triggers! So we will have a server ddl trigger and multiple database ddl triggers.

As most of us already know, DDL Triggers are programmable objects in SQL Server. It means we can do more than just auditing with them. On technet, you will read the following list of use cases that DDL triggers are fit to accomplish:

DDL triggers can be used for administrative tasks such as auditing and regulating database operations.

Use DDL triggers when you want to do the following:

- You want to prevent certain changes to your database schema.

- You want something to occur in the database in response to a change in your database schema.

- You want to record changes or events in the database schema.

Each DDL trigger implied in the audit should perform as follows:

So, one who wants to audit DDL events with this solution must define which information he wants to collect and where he wants to store it. For demonstration purpose, we will keep it simple and say that we will collect the following information and store it to tables:

- The moment when the event occurred

- The login name

- The client computer name and application

- The current database name of the session

- The kind of event that occurred

- The information about the object (SchemaName, ObjectName) – We could also include a « SubObjectName » information in the list in order to handle, for example, events related to table partitionning management

- The T-SQL statement that fired the trigger

- Optionally, we could also keep the complete event descriptor which is of XML data type.

In order to get this information, we will use the EVENTDATA built-in function which returns an XML describing the event that has just occurred. The returned value of this function is the optional information listed above. As we get an XML data type, we can query it using XQuery.

You will find below two examples of what we get back using this function. The first one is extracted from a server-level DDL event and the next one from a database-level DDL event.

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 |

<EVENT_INSTANCE> <EventType>ALTER_VIEW</EventType> <PostTime>2015-08-19T14:51:45.500</PostTime> <SPID>60</SPID> <ServerName>TestServer</ServerName> <LoginName>MGSBUSINESS\Jefferson </LoginName> <UserName>dbo</UserName> <DatabaseName>DbaTools</DatabaseName> <SchemaName>maintenance</SchemaName> <ObjectName>CleanupSettings</ObjectName> <ObjectType>VIEW</ObjectType> <TSQLCommand> <SetOptions ANSI_NULLS="ON" ANSI_NULL_DEFAULT="ON" ANSI_PADDING="ON" QUOTED_IDENTIFIER="ON" ENCRYPTED="FALSE" /> <CommandText> ALTER VIEW [maintenance].[CleanupSettings] AS SELECT DbName, ObjectOwner, ObjectName, ObjectType, CleanupEnabled, CleanupColumn, CleanupType, CleanupParam1, CleanupParam2, CleanupParam3, CleanupParam4 FROM maintenance.MaintenanceSettings ; </CommandText> </TSQLCommand> </EVENT_INSTANCE> |

As we can see, we don’t get exactly the same structure. This means there are two different Xml Schema Definitions and we can specialize our change logs.

The following table structure should be defined in order to store data about DDL events:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 |

CREATE TABLE [auditlog].[ChangeLog]( [ChangeLogID] [int] IDENTITY(1,1) NOT NULL, [CreateDate] [datetime] NULL, [LoginName] [sysname] NULL, [ComputerName] [sysname] NULL, [ProgramName] [nvarchar](255) NULL, [DBName] [sysname] NOT NULL, [SQLEvent] [sysname] NOT NULL, [SchemaName] [sysname] NULL, [ObjectName] [sysname] NULL, [SQLCmd] [nvarchar](max) NULL, [XmlEvent] [xml] NOT NULL, CONSTRAINT [PK_ChangeLog] PRIMARY KEY CLUSTERED ( [ChangeLogID] ASC ) ) ; |

And the following trigger code should be defined:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 |

BEGIN DECLARE @EventDataXml XML; DECLARE @SchemaName SYSNAME; DECLARE @ObjectName SYSNAME; DECLARE @EventType SYSNAME; -- getting back event data SET @EventDataXml = EVENTDATA(); SELECT @EventType = @EventDataXml.value(''(/EVENT_INSTANCE/EventType)[1]'', ''SYSNAME'') @SchemaName = @EventDataXml.value(''(/EVENT_INSTANCE/SchemaName)[1]'', ''SYSNAME'') @ObjectName = @EventDataXml.value(''(/EVENT_INSTANCE/ObjectName)[1]'', ''SYSNAME'') ; INSERT [auditlog].[ChangeLog] ( [CreateDate],[LoginName], [ComputerName],[ProgramName],[DBName],[SQLEvent], [SchemaName], [ObjectName], [SQLCmd], [XmlEvent] ) SELECT GETDATE(), SUSER_NAME(), HOST_NAME(), PROGRAM_NAME(), @EventDataXml.value(''(/EVENT_INSTANCE/DatabaseName)[1]'', ''SYSNAME''), @EventType, @SchemaName, @ObjectName, @EventDataXml.value(''(/EVENT_INSTANCE/TSQLCommand)[1]'', ''NVARCHAR(MAX)''), @EventDataXml ; END; |

As explained above, there are DDL events on server scope and on database scope and it’s not possible to create a single trigger that will do the job. Actually, you will need to create previous table and trigger multiple times:

-

A server DDL change log table and a server DDL trigger using the following CREATE statement:

123456CREATE TRIGGER [Server_DDL_Audit] ON ALL SERVERFOR DDL_SERVER_LEVEL_EVENTSAS…

-

A database DDL change log table and a database DDL trigger per database as all database users can be assigned a single database. The trigger will be created as follows:

123456CREATE TRIGGER [Database_DDL_Audit] ON DATABASEFOR DDL_DATABASE_LEVEL_EVENTSAS…

Note:

- You can create a central table to store DDL events data for all audited databases, but you will need to create a database trigger in every database you want to audit. Plus, you will eventually need to review login mappings and user permissions as well.

-

If you want to implement this solution, don’t forget to adjust table names so that it’s like for instance:

- ServerChangeLog for server-related DDL events

- DatabaseChangeLog for database-related DDL events

You should also adjust the insert statement in triggers so that it uses those names.

We’ve seen the first method. Let’s review some its advantages and disadvantages.

| Advantages | Disadvantages |

Flexibility and « storage-friendly »

|

Additional management tasks

|

We could modify this trigger-based approach to output to a file, but it would require either to assign advanced permission or to be enabled xp_cmdshell and it’s not necessarily the best approach…

Let’s now do the same using default SQL Trace.

Auditing DDL events using default trace

The SQL Server default trace is an old functionality (since at least SQL Server 2005) that provides the ability to track some key events, primarily related to the configuration options. This feature is considered depreciated and should not be used in new developments. We should use Extended Events instead.

The default trace tracks DDL Changes, password changes, server configuration and database settings changes or file growth…

It’s enabled when default trace enabled Server Configuration Option is set to 1. So, when implementing auditing using default trace, we must ensure that this setting is always up and running.

Another point that has to be mentioned is that the output of this feature is limited to a set of 5 files of maximum 20 MB which are rolled over. This means that to keep a suitable history, we must take a copy of trace files as regularly as possible.

This means that we do not need a lot of work to audit DDL events. We “just” need to:

- Enable default trace.

- Create a scheduled task that will ensure the functionality is still on and adjust when it’s not the case.

- Build and regularly schedule a script that will copy audit files.

Until now, we’ve just talked about writing and storing default trace files. Let’s now talk about how to read from a trace file.

Reading from a trace file requires the use of fn_trace_gettable built-in function:

|

1 2 3 4 5 6 7 |

USE AdventureWorks2012; GO SELECT * FROM fn_trace_gettable('c:\temp\mytrace.trc', default); GO |

For default trace, the following query should fit the basic need to know that « something happened »:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 |

SELECT TE.name AS [EventName], TT.DatabaseName , TT.DatabaseID , TT.ApplicationName , TT.LoginName , TT.Duration , TT.StartTime , TT.EndTime FROM sys.fn_trace_gettable( CONVERT(VARCHAR(4000), ( SELECT TOP 1 f.[value] FROM sys.fn_trace_getinfo(NULL) f WHERE f.property = 2 )), DEFAULT ) TT JOIN sys.trace_events TE ON TT.EventClass = TE.trace_event_id ORDER BY TT.StartTime desc ; |

This will give us the following kind of results:

As we did for DDL Trigger auditing, let’s review some advantages and disadvantages of auditing using default trace.

| Advantages | Disadvantages |

« User-friendly »

|

Additional management tasks

|

Auditing DDL events using SQL Audits

Reminder

SQL Server Audits fundamentals have been introduced in my previous article. For readers who don’t have time to read this full article, let’s review a summary of its contents (it’s actually taken out of this article).

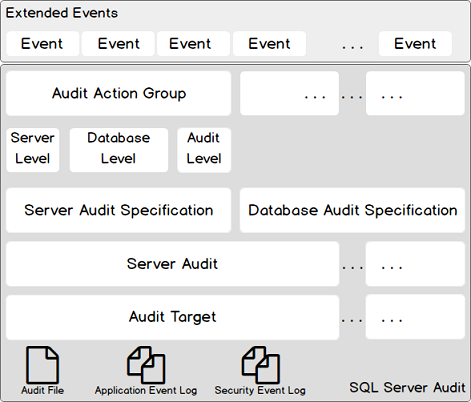

SQL Server Audit takes advantage of the Extended Events feature and uses events groups as input. We also refer to these groups as Audit Action groups. Audit action groups relate to a « depth level » in SQL Server: either they are server-, or database- or audit- related. Audit action groups are mapped to a server audit specification or a database audit specification, primarily based on our audit policy.

All these components together form what we call a server audit. The product of an audit must be stored somewhere. SQL Server audit has some flexibility for it and provides three different kinds of output, which are known as audit targets. Audit target is either a file on the server host, the application log or the security log.

You will find below a diagram that summarizes the architecture of SQL Server Audits.

Back to the example

We will first create our audit object. It will be called Audit-Demo-DDL. Here is the T-SQL statement to do so. Alternately, you can use SSMS.

|

1 2 3 4 5 6 7 8 9 10 11 12 13 |

CREATE SERVER AUDIT [Audit-Demo-DDL] TO FILE ( FILEPATH = N'C:\Windows\Temp' ,MAXSIZE = 64 MB ,MAX_ROLLOVER_FILES = 2147483647 ,RESERVE_DISK_SPACE = OFF ) WITH ( QUEUE_DELAY = 1000 ,ON_FAILURE = CONTINUE ) |

This object is created with an OFF status. It should not appear in the list returned by the following query:

|

1 2 3 |

select * from sys.dm_server_audit_status |

Now, let’s create the server audit specification using the following statement:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 |

CREATE SERVER AUDIT SPECIFICATION [Spec-Demo-DDL] FOR SERVER AUDIT [Audit-Demo-DDL] ADD (DATABASE_CHANGE_GROUP), -- database is created, altered, or dropped ADD (DATABASE_OBJECT_CHANGE_GROUP), -- CREATE, ALTER, or DROP statement is executed on database objects, such as schemas ADD (DATABASE_OBJECT_OWNERSHIP_CHANGE_GROUP), -- ADD (DATABASE_OBJECT_PERMISSION_CHANGE_GROUP), -- a GRANT, REVOKE, or DENY has been issued for database objects, such as assemblies and schemas ADD (DATABASE_OWNERSHIP_CHANGE_GROUP), -- use of ALTER AUTHORIZATION statement to change the owner of a database, and the permissions that are required to do that are checked ADD (DATABASE_PERMISSION_CHANGE_GROUP), -- GRANT, REVOKE, or DENY is issued for a statement permission by any principal in SQL Server ADD (DATABASE_PRINCIPAL_CHANGE_GROUP), -- raised when principals, such as users, are created, altered, or dropped from a database. ADD (DATABASE_ROLE_MEMBER_CHANGE_GROUP), -- a login is added to or removed from a database role. This event class is raised for the sp_addrolemember, sp_changegroup, and sp_droprolemember stored procedures ADD (LOGIN_CHANGE_PASSWORD_GROUP), -- a login password is changed by way of ALTER LOGIN statement or sp_password stored procedure ADD (SERVER_OBJECT_CHANGE_GROUP), -- CREATE, ALTER, or DROP operations on server objects ADD (SERVER_OBJECT_OWNERSHIP_CHANGE_GROUP), -- owner is changed for objects in the server scope. ADD (SERVER_OBJECT_PERMISSION_CHANGE_GROUP), -- GRANT, REVOKE, or DENY is issued for a server object permission by any principal in SQL Server ADD (SERVER_PERMISSION_CHANGE_GROUP), -- GRANT, REVOKE, or DENY is issued for permissions in the server scope, such as creating a login. ADD (SERVER_PRINCIPAL_CHANGE_GROUP), -- server principals are created, altered, or dropped. -- a principal issues the sp_defaultdb or sp_defaultlanguage stored procedures or ALTER LOGIN statements -- sp_addlogin and sp_droplogin stored procedures. -- sp_grantlogin or sp_revokelogin stored procedures ADD (SERVER_ROLE_MEMBER_CHANGE_GROUP) -- a login is added or removed from a fixed server role. This event is raised for the sp_addsrvrolemember and sp_dropsrvrolemember stored procedures. WITH (STATE=ON) |

Now, we can enable the server audit:

|

1 2 3 |

ALTER SERVER AUDIT [Audit-Demo-DDL] WITH (STATE=ON) ; |

Now, let’s try it out! You will find below a little script which creates a table, adds a primary key constraint, inserts some data and drops the table.

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 |

use testJEL; CREATE TABLE dbo.TestAuditSettings ( IdCol BIGINT IDENTITY(1,1) NOT NULL, ValCol VARCHAR(256) ); alter table dbo.TestAuditSettings add CONSTRAINT PK_TestAuditSettings PRIMARY KEY CLUSTERED ( IdCol ) ; insert into dbo.TestAuditSettings ( ValCol ) select * FROM ( VALUES ('First'), ('Second'), ('Third') ) as vals ( cols ) select * from dbo.TestAuditSettings DROP TABLE dbo.TestAuditSettings USE [master] GO CREATE LOGIN [TestAudit] WITH PASSWORD=N'5rKenvzzgIlynLjdI4krhuO7kXT1tiEjXFZWlVVJoi4=', DEFAULT_DATABASE=[master], DEFAULT_LANGUAGE=[us_english], CHECK_EXPIRATION=OFF, CHECK_POLICY=OFF ALTER LOGIN [TestAudit] DISABLE DROP LOGIN [TestAudit] ; GO |

I said previously that the sys. server_audit_status was a particularly useful table. Here is the first usage of this great view: getting the location of the current server audit file. This is performed with the following query:

|

1 2 3 4 5 6 |

select status_desc, audit_file_path From sys.dm_server_audit_status where name = 'Audit-Demo-DDL' |

As I get back the audit_file_path, I am able to read this file and get the list of actions that have been audited so far using sys.fn_get_audit_file function.

We can by the way put the value of the audit_file_path column into a variable. So the query to read current audit file looks like this:

|

1 2 3 4 5 6 7 8 9 10 |

DECLARE @AuditFilePath VARCHAR(8000); select @AuditFilePath = audit_file_path From sys.dm_server_audit_status where name = 'Audit-Demo-DDL' select * from sys.fn_get_audit_file(@AuditFilePath,default,default) |

And here is what I get back when I run this statement:

Apparently, the ALTER TABLE and DROP TABLE statement have not been tracked… We should maybe add the SCHEMA_OBJECT_CHANGE_GROUP action group or create a database audit specification if this option is available…

Anyway, this shows us we must really take care of the action groups we add to a server audit and also test that this audit does the job we think it should do.

Let’s clean up our stuff. You will find below the statements to drop the objects we created in this section.

|

1 2 3 4 5 6 7 |

use master; alter server audit specification [Spec-Demo-DDL] with (state = off); drop server audit specification [Spec-Demo-DDL] alter server audit [Audit-Demo-DDL] with (state = off) drop server audit [Audit-Demo-DDL] |

As for previous ways to implement a DDL audit, let’s review some advantages and disadvantages of using SQL Server Audits

| Advantages | Disadvantages |

« User-friendly »

|

Additional management tasks

|

Conclusion

As a conclusion, we can say that SQL Server Audit is a great feature delivered starting with Standard Edition that is scalable to ensure a professional enterprise-level auditing of server activity. Its implementation is easy, but needs additional tasks to be performed to ensure the kind of zero audit data loss.

While it’s not perfect, SQL Server Audit is, to me, the best feature available out of the box to implement security auditing in SQL Server and go a step forwards to « GDPR compliance ».

Previous article in this series:

Resources

- SQL Server Audit (Database Engine)

- SQL Server Audit Action Groups and Actions

- Understanding DDL Triggers

- EVENTDATA (Transact-SQL)

- SQL Server Extended Events Targets

- How to perform a performance test against a SQL Server instance - September 14, 2018

- Concurrency problems – theory and experimentation in SQL Server - July 24, 2018

- How to link two SQL Server instances with Kerberos - July 5, 2018