Introduction

Intended audience

This document is intended for application developers and database administrators who plan to evaluate performance for any computer hosting a SQL Server instance.

Context

Let’s say a new physical server has been delivered and we have to test its performance in regards to its future destination, which is a database server. For newbies, there are a lot of questions that emerge:

- What has to be tested?

- What solutions can be used?

- What information do they provide?

- How to use them?

- Is there a tool that does all the tests I need to get a good insight into server performance or is it better to use two or more tools in conjunction?

- …

Most questions out there should lead us to following steps:

- Definition of the scope of the test

- Analysis and selection of the tools available to achieve test purposes

- If not already done, creation of a performance report template

- Usage of this report template to build actual performance tests report for the server

- Actual performance test with results documentation in report

- Analysis of performance test results (compared to a baseline)

- Feedback on report template for continuous improvement

Example of performance testing tools

There is a bunch of tools that we can use to do a performance test:

-

General purpose testing:

- PassMark PerformanceTest

- CPU-z

- CrystalDisk Mark

- Phoronix Test Suite

- …

-

Database-related testing:

- Sqlio.exe

- HammerDb

- OStress

- …

In conjunction with these tools, it’s worth using a database monitoring tool so that we can collect and historize performance statistics. Here are some of the tools that can be used:

- sp_BlitzFirst®

- Windows Performance Monitor (perfmon)

- Spotlight

- Idera Diagnostic Manager

- …

Here are the choices made for the purpose of this article:

- PassMark PerformanceTest to get a first overview of overall server performance

- Benchmarking with HammerDb and collecting performance statistics with sp_BlitzFirst®.

Getting server performance overview with PassMark PerformanceTest

The latest version for this tool is the 9th and can be downloaded for testing (and purchase) on its dedicated web page.

The installation is pretty straightforward, so it won’t be reviewed here.

Once PerformanceTest is installed, we can launch it. We will get following window.

As we can see, there are multiple options available. We will just click on “Run Benchmark” to run all the tests. We are prompted following message to which we can answer “Yes”.

And the benchmark begins…

Once the tests are done, you will get a score that you can compare to others or between computers in order to evaluate performance.

Note

In this example, the 3D Graphics Mark is grayed because I did the test on a virtual machine without 3D acceleration.

If we get to detailed test data, we can see that there is a preamble testing for database operations:

Performance testing with HammerDb

HammerDb Installation Process

Go to https://www.hammerdb.com/download.html to get back the latest version of HammerDB.

The installation process is straightforward and won’t be discussed.

SQL Server Performance statistics collection

Collection stored procedure

While HammerDb is running, we will collect performance statistics using the sp_BlitzFirst® stored procedure from the open source project called SQL Server First Responder Kit.

So, the first step is to get back latest version of this stored procedure and set this up to the target SQL Server instance we will be testing.

Preferably, this procedure should be installed in a database reserved to DBA usage, let’s call it [DBAMGMT].

We can check the stored procedure actually works with following statement:

|

1 2 3 4 5 6 7 8 9 |

EXEC dbo.sp_BlitzFirst @Seconds = 5, @OutputDatabaseName = 'DBAMGMT', @OutputSchemaName = 'Monitoring', @OutputTableName = 'BlitzFirstResults', @OutputTableNameFileStats = 'BlitzFirstResults_FileStats', @OutputTableNamePerfmonStats = 'BlitzFirstResults_PerfmonStats', @OutputTableNameWaitStats = 'BlitzFirstResults_WaitStats' ; |

Automating collection with a SQL Agent Job

This statement will be used to define a SQL Server Agent Job that will run every 10 seconds during the whole performance test.

Here is the code to create such a job:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63 64 65 66 67 68 69 70 71 72 73 74 75 76 77 78 79 80 81 82 83 84 85 86 87 |

USE [msdb] GO /****** Object: Job [BlitzFirst - Collection] Script Date: 17-04-18 14:37:26 ******/ IF EXISTS (SELECT job_id FROM msdb.dbo.sysjobs_view WHERE name = N'BlitzFirst - Collection') EXEC msdb.dbo.sp_delete_job @job_name = 'BlitzFirst - Collection', @delete_unused_schedule=1 GO /****** Object: Job [BlitzFirst - Collection] Script Date: 17-04-18 14:37:26 ******/ BEGIN TRANSACTION DECLARE @ReturnCode INT SELECT @ReturnCode = 0 /****** Object: JobCategory [[Uncategorized (Local)]] Script Date: 17-04-18 14:37:26 ******/ IF NOT EXISTS (SELECT name FROM msdb.dbo.syscategories WHERE name=N'[Uncategorized (Local)]' AND category_class=1) BEGIN EXEC @ReturnCode = msdb.dbo.sp_add_category @class=N'JOB', @type=N'LOCAL', @name=N'[Uncategorized (Local)]' IF (@@ERROR <> 0 OR @ReturnCode <> 0) GOTO QuitWithRollback END DECLARE @jobId BINARY(16) select @jobId = job_id from msdb.dbo.sysjobs where (name = N'BlitzFirst - Collection') if (@jobId is NULL) BEGIN EXEC @ReturnCode = msdb.dbo.sp_add_job @job_name=N'BlitzFirst - Collection', @enabled=0, @notify_level_eventlog=2, @notify_level_email=2, @notify_level_netsend=0, @notify_level_page=0, @delete_level=0, @description=N'No description available.', @category_name=N'[Uncategorized (Local)]', @owner_login_name=N'sa', @notify_email_operator_name=N'The DBA Team', @job_id = @jobId OUTPUT IF (@@ERROR <> 0 OR @ReturnCode <> 0) GOTO QuitWithRollback END /****** Object: Step [Run Sp_BlitzFirst with statistics collection] Script Date: 17-04-18 14:37:26 ******/ IF NOT EXISTS (SELECT * FROM msdb.dbo.sysjobsteps WHERE job_id = @jobId and step_id = 1) EXEC @ReturnCode = msdb.dbo.sp_add_jobstep @job_id=@jobId, @step_name=N'Run Sp_BlitzFirst with statistics collection', @step_id=1, @cmdexec_success_code=0, @on_success_action=1, @on_success_step_id=0, @on_fail_action=2, @on_fail_step_id=0, @retry_attempts=0, @retry_interval=0, @os_run_priority=0, @subsystem=N'TSQL', @command=N'EXEC Monitoring.sp_BlitzFirst @Seconds = 5, @OutputDatabaseName = ''DBAMGMT'', @OutputSchemaName = ''dbo'', @OutputTableName = ''BlitzFirstResults'', @OutputTableNameFileStats = ''BlitzFirstResults_FileStats'', @OutputTableNamePerfmonStats = ''BlitzFirstResults_PerfmonStats'', @OutputTableNameWaitStats = ''BlitzFirstResults_WaitStats'' ; ', @database_name=N'DBAMGMT', @flags=4 IF (@@ERROR <> 0 OR @ReturnCode <> 0) GOTO QuitWithRollback EXEC @ReturnCode = msdb.dbo.sp_update_job @job_id = @jobId, @start_step_id = 1 IF (@@ERROR <> 0 OR @ReturnCode <> 0) GOTO QuitWithRollback EXEC @ReturnCode = msdb.dbo.sp_add_jobschedule @job_id=@jobId, @name=N'sc', @enabled=1, @freq_type=4, @freq_interval=1, @freq_subday_type=2, @freq_subday_interval=10, @freq_relative_interval=0, @freq_recurrence_factor=0, @active_start_date=20180416, @active_end_date=99991231, @active_start_time=0, @active_end_time=235959, @schedule_uid=N'624dd28c-8faf-4979-88a4-947977e9a834' IF (@@ERROR <> 0 OR @ReturnCode <> 0) GOTO QuitWithRollback EXEC @ReturnCode = msdb.dbo.sp_add_jobserver @job_id = @jobId, @server_name = N'(local)' IF (@@ERROR <> 0 OR @ReturnCode <> 0) GOTO QuitWithRollback COMMIT TRANSACTION GOTO EndSave QuitWithRollback: IF (@@TRANCOUNT > 0) ROLLBACK TRANSACTION EndSave: GO |

Note

Previous script will drop an existing SQL Agent Job. It will also create the job disabled. It will be up to the tester to enable and disable the job for his tests.

One needs to set the database names in red according to its desire.

Some useful queries

You will find below some useful queries against collection tables that will allow the generation of report charts. Parts in red have to be changed before execution.

An excel worksheet model is available to generate charts for some statistics collected during benchmark test. Its name is “MDL-0020 – sp_BlitzFirst graphs”.

Page Life Expectancy

|

1 2 3 4 5 |

select CheckDate,cntr_value as PageLifeExpectancy from dbo.BlitzFirstResults_PerfmonStats where counter_name ='Page life expectancy' AND CheckDate BETWEEN '2018-04-16 13:10:00' AND '2018-04-16 13:33:00' order by ID desc |

Batch requests / sec

|

1 2 3 4 5 6 7 8 9 |

select CheckDate, REPLACE( CONVERT(VARCHAR(100),value_per_second),'.',',' ) as BatchRequestsPerSec from dbo.BlitzFirstResults_PerfmonStats where counter_name ='Batch Requests/sec' AND CheckDate BETWEEN '2018-04-16 13:10:00' AND '2018-04-16 13:33:00' order by ID desc |

Server wait total

|

1 2 3 4 5 6 |

select CheckDate,value_delta as AverageWaitTimeMs from dbo.BlitzFirstResults_PerfmonStats where counter_name ='Average Wait Time (ms)' and instance_name = '_Total' AND CheckDate BETWEEN '2018-04-16 13:10:00' AND '2018-04-16 13:33:00' |

Server wait by category

|

1 2 3 4 5 6 |

select CheckDate,instance_name,value_delta as AverageWaitTimeMs from dbo.BlitzFirstResults_PerfmonStats where counter_name ='Average Wait Time (ms)' and instance_name <> '_Total' AND CheckDate BETWEEN '2018-04-16 13:10:00' AND '2018-04-16 13:33:00' |

Test preparation

The HammerDb tools will create a database which will contain the schema and database objects used for benchmarking. This schema represents the activity of a firm with one or more warehouses.

If it’s the first time we run this tool against SQL Server, we ought to create some test databases with different numbers of warehouses.

Steps to create a test database

Let’s first review how to create a test database to use as a target for HammerDb.

To do so, we will first step create an empty database (with HammerDb) called [TPCC_x_WH] where x represents the number of warehouses we will create. The approximate (but unconfirmed) database size computation formula is 100Mb per warehouse.

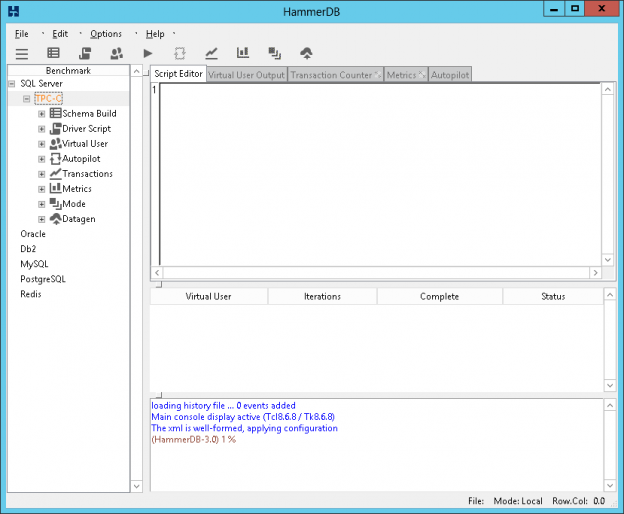

Let’s start HammerDb.

We will double-click on SQL Server and select TPCC benchmarking option (for an OLTP simulation) then click on the “OK” button.

We will get a confirmation message:

And the display is now for SQL Server. We can expand the “SQL Server > TPC-C “ tree node, go down to “Schema Build” and double click on Options

We will be prompted a dialog in which we can set the actual target computer and databases so as the credentials to be used for testing.

Here is an example of one warehouse:

Once you hit “OK”, double-click on the “Build” leaf. A confirmation will be asked.

Once, we clicked on the “Yes” button, we will have to wait for HammerDb to complete the creation of its testing schema:

Once the creation is complete, you will see following message (green box).

Hit the red stop button to stop the thread used to create the database.

Now the database is ready and as we won’t stop at this point for performance testing, it’s worth taking a backup of this database.

Databases to create for benchmark

Four databases should be created and backed up on the lowest version of SQL Server with following a number of warehouses.

Number of warehouses | Experienced size on disk | Experienced backup size (compressed) |

1 | 150 Mb | 53 Mb |

5 | 416 Mb | 238 Mb |

100 | 8100 Mb | 4.5 Gb |

500 | 39.75 Gb | 22.6 Gb |

Note

It’s strongly recommended to take backups of these databases as the creation process is really time-consuming.

Test Definition

Here are the steps to follow to fire a test against a given [TPCC_x_DW] database. We will assume you let HammerDb open.

Test case script generation

Expand “Driver Script” node and double-click on the “Options” leaf.

Actions to be done:

- Edit server, database and credential settings

- Select “Timed Test Driver Script”

- Keep the default ramp-up settings.

- Adjust the test duration to 10 minutes

- Keep “Use All warehouses” box unchecked

- Check “Time Profile” (Have a look at this documentation page for further details)

- Click on the “OK” button

The settings dialog should look as follows after step 6:

Note:

We could also check the “Checkpoint when complete” option if we use the AutoPilot mode that we will discuss in following subsection.

Now the options are set, we can generate the script that will be used by HammerDb. This is done by clicking on the “Load” leaf of tree hierarchy in the left panel:

Virtual users creation

Now it’s time to tell HammerDb how many users will be using the database defined in “Driver Script” options.

Virtual users refer to threads that will be created by HammerDb during the test. Their number can be configured in the “Virtual Users” node.

Most of the time, we will fire more than one test where only the number of virtual users vary. There are two ways to make it. The first one is to perform as many tests as virtual users count variations. The other one is to use the “Autopilot” mode of HammerDb.

To enable the Autopilot mode, we will expand the “Autopilot” node and double-click on “Options”. This opens up a dialog in which we will select the “Autopilot enabled”:

As we have a ramp-up of 2 minutes and test duration set to 10 minutes, we will set minutes per test value to 15 to be sure everything is ok.

We will also replace the default virtual user sequence by following:

3 5 9 17 25 37 49 65 97 129 197 257

Note

Feel free to create your own virtual user’s number sequence based on the requirements for the application that will be using SQL Server.

For instance, an alternative could be:

3 11 101 201 501 1001 1501 2001

Anyway, with the first sequence, the dialog will look like this:

We can click on the “OK” button to close the dialog.

Before digging into the execution of AutoPilot mode, there is a step not to forget: the activation of the performance statistics collection job.

This can be done with following T-SQL statement:

|

1 2 3 4 |

EXEC msdb.dbo.sp_update_job @job_name = N'BlitzFirst - Collection', @enabled = 1 ; GO |

Test execution

Now, everything is ready and we can double-click on the Autopilot button:

It will open an “Autopilot” tab in the central pane:

As soon as a test is finished, there will be a “TEST RESULT” line showing the observed number of transactions per minute and the observed number of order created per minute.

We could keep track of these two numbers associated with the number of configured virtual users in order to create charts like this one:

You could also create charts with SQL Server statistics like “Page Life Expectancy” or “Average Wait Time (ms)”. As we can find the time window during which a test occurred, it’s pretty easy to restrict data sets to just this time window.

- How to perform a performance test against a SQL Server instance - September 14, 2018

- Concurrency problems – theory and experimentation in SQL Server - July 24, 2018

- How to link two SQL Server instances with Kerberos - July 5, 2018