There are many ways to process your SSAS Tabular Model. This can be achieved in SSIS using the Analysis Services Execute DDL Task or manually, through Management studio GUI but to have a little fun & make the task more flexible I’m going to script this with ASSL/TMSL & build a notification round it. We can then schedule this as a step in a SQL agent job, call it from SSIS or PowerShell.

The easiest way to get started was for me to choose the Process Database option in SSMS and once the options are set, choosing to script to a new Query Window. This gives us a quick script to work with without the hassle of typing it out myself. I can then adjust or add to it as needed.

The Importance of Compatibility Level

This was the XMLA generated for processing a tabular model called Customer Accounts which sits on a SQL Server 2016 SSAS installation I have been playing with. One thing to note here is that the Compatibility Level for this DB is set to SQL Server 2012 SP1 or later (1103).

|

1 2 3 4 5 6 7 8 |

<Process xmlns="http://schemas.microsoft.com/analysisservices/2003/engine"> <Type>ProcessDefault</Type> <Object> <DatabaseID>Customer Accounts</DatabaseID> </Object> </Process> |

According to Microsoft the language this is using is Analysis Services Scripting Language (ASSL for XMLA). The importance of your database’s compatibility level & keeping it consistent is that this script will not work if you execute it against a tabular model with a Compatibility Level of 1200. XMLA is no longer used for tabular models as Microsoft changed the scripting language. SQL Server 2016 now uses TMSL for scripting Tabular model databases. Here’s excerpts from the MSDN page that clarifies the change:

“Tabular Model Scripting Language (TMSL) is the command and object model definition syntax for tabular databases at compatibility level 1200, created for the SQL Server 2016 version of Analysis Services. TMSL communicates to Analysis Services through the XMLA protocol, where the XMLA.Execute method accepts both JSON-based statement scripts in TMSL as well as the traditional XML-based scripts in Analysis Services Scripting Language (ASSL for XMLA).”

ASSL is not ideal for Tabular models, but designing and implementing a more semanticlly correct scripting language requuired deep changes across the spectrum of the Analysis Services component architecture. Changes of this magnitude can only be made in major version releases, and only when all components are impacted by this change can be updated in tandem.

The main point to note with this change is that we didn’t see a transition version (with support for both ASSL & TMSL). It’s a straight cut. “1103” databases won’t be able to use TMSL and “1200” won’t be able to use XMLA. Don’t Panic! There is a workaround however, that will help you move a script between compatibility levels. (I’ll go into that later). The headache is, if you have any tabular models you are thinking about moving up to Compatibility Level 1200, check the scripts you run against them as they will ALL need to be recreated using TMSL!

You may have also noticed, that even though we are using a “1200” database & we’ve generated a TMSL script, that SSAS is still using an XMLA window in Management Studio. This is because SSAS still uses the XMLA protocol which will accept both JSON and ASSL. The XMLA protocol accepts both but SSAS does not, which makes transition to the higher, 1200 compatibility level, far from smooth.

Long live TMSL!

Using the same settings in the Process Database wizard against a “1200 Tabular DB” generates the following script:

|

1 2 3 4 5 6 7 8 9 10 11 12 |

{ "refresh": { "type": "automatic", "objects": [ { "database": "Customer Accounts" } ] } } |

So, using this as our starting point, we can flesh the script out a bit.

- We can add further metadata to the script using the description definition.

- If you wanted to only process a table that can be defined using the table parameter.

- All options are defined in the Microsoft reference page for the Refresh command:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 |

{ "refresh": { "description": "This is where I explain what this script does", "type": "automatic", "objects": [ { "database": "Customer Accounts" "table": "Date" } ] } } |

Executing TMSL with SQL Agent

Now that we have a script to work with, let’s create the job to surround it.

-

Create a SQL Agent Job on a DB engine & name the job appropriately.

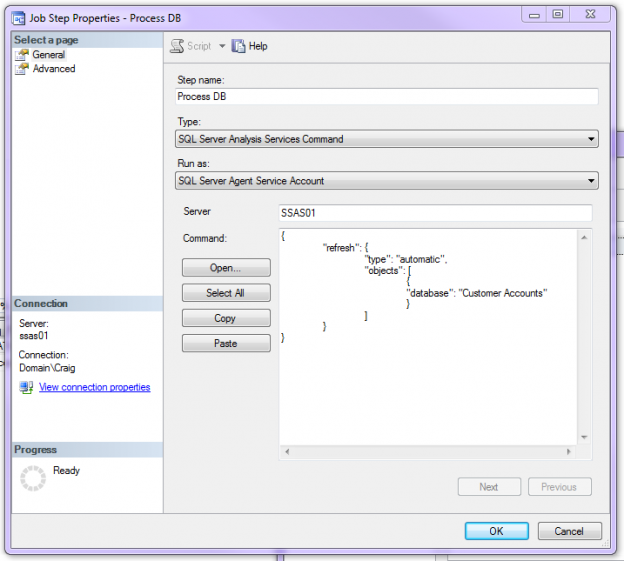

Create a new step with the following settings:

- Type: SQL Server Analysis Services Command

- Run As: SQL Server Agent Service Account

- Server: SSAS01

-

Paste the finished TMSL script into the Command window, name the step appropriately & click OK.

Jumping aside for a minute, I mentioned at the start that there is a workaround to get TMSL to execute in an 1103 or 1100 compatibility level database. This is how we can get round that.

As TMSL is only supported in SQL Server 2016 you will not be able to set this SQL Agent job up as shown above. To do this we can wrap the JSON in XMLA which can be handled by a SQL 2012 -2014 Agent job. Here’s an example:

1234567891011121314<Statement xmlns="urn:schemas-microsoft-com:xml-analysis">{"refresh": {"type": "automatic","objects": [{"database": "Customer Accounts"}]}}</Statement>With our Process DB step setup, we now want to look at logging & notification of success.

We want to provide information in an email format that will help admins or users know the database has been processed. We can query the SSAS engine for the last processed date of the database. This will have to be an MDX query so I’ll need to get the SQL Agent step to store the output. The final “email” step can then pick that up & send the email.

Here’s how…

Create a new step with the following settings:

- Type: SQL Server Analysis Services Query

- Run As: SQL Server Agent Service Account

- Server: SSAS01

- Database: Customer Accounts (drop down)

-

Now we want to paste this query into the Command window. This returns the name of the DB & its last processed Date. (You can test it out in an MDX query window on the SSAS instance)

123456789SELECT[CATALOG_NAME],[DATE_MODIFIED]FROM$SYSTEM.DBSCHEMA_CATALOGSWHERECATALOG_NAME = 'Customer Accounts'

-

Now Click Advanced & check the Log to Table checkbox.

This logs the job step output (Our DB & last processed date) to the [msdb].[dbo].[sysjobstepslogs] table. We’ll then add logic to the email step to find & return these values.

For the final step I’ve put together some SQL to query the above table and send it as an HTML email.

Feel free to pad this out with more options. I.e.

- Check if the cube has processed “today” first & send a different email.

- Highlight the datetime if the refresh took longer than XX mins (assuming you know the start time)

Create a new step with the following settings:

- Type: Transact-SQL script (T-SQL)

- Run As: SQL Server Agent Service Account

- Database: msdb

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 |

USE msdb GO BEGIN DECLARE @EmailRecipient NVARCHAR(1000) DECLARE @SubjectText NVARCHAR(1000) DECLARE @ProfileName NVARCHAR(1000) DECLARE @tableHTML1 NVARCHAR(MAX) DECLARE @tableHTMLAll NVARCHAR(MAX) DECLARE @startDate SMALLDATETIME DECLARE @stopDate SMALLDATETIME DECLARE @timeSpanText VARCHAR(100) SET QUOTED_IDENTIFIER ON SET NOCOUNT ON SELECT @EmailRecipient = 'craig@craigporteous.com' SET @SubjectText = 'SSAS201 Process Database has Completed' SELECT TOP 1 @ProfileName = [Name] FROM msdb.dbo.sysmail_profile WHERE [Name] = 'Alert-BI-Admins' SET @tableHTML1 = N'<H3 style="color:#642891; font-family:verdana">SSAS Tabular Processing</H3>' + N'<p align="left" style="font-family:verdana; font-size:8pt"></p>' + N'<table border="3" style="font-size:8pt; font-family:verdana; text-align:left">' + N'<tr style="color:#42426F; font-weight:bold"><th>Tabluar Database Name</th><th>Date Last Processed</th>' + CAST(( SELECT td = CAST([log] as xml).value('(//*[local-name()="CATALOG_NAME"])[1]', 'nvarchar(max)'), '', td = CAST(CONVERT(datetimeoffset, CAST([log] as xml).value('(//*[local-name()="DATE_MODIFIED"])[1]', 'nvarchar(max)'),127) AS DATETIME) FROM [msdb].[dbo].[sysjobstepslogs] WHERE step_uid IN (SELECT js.step_uid FROM [msdb].[dbo].[sysjobsteps] js LEFT JOIN [msdb].[dbo].[sysjobs] j ON j.job_id = js.job_id WHERE j.name = 'Process Customer Accounts Tabular Model' --This is the name of the SQL Agent Job & is used to uniquely identify the step log we want AND js.step_id = 2) --This is the MDX step number in our SQL Agent Job. Change this if you add other steps. FOR XML PATH('tr'), TYPE) AS NVARCHAR(MAX)) + N'</table>' --Set Table variable to a zero length string if it’s null SET @tableHTMLAll = ISNULL (@tableHTML1,'') -- Check Table variable is greater than a zero length string IF @tableHTMLAll <> '' BEGIN SELECT @tableHTMLAll EXEC msdb.dbo.sp_send_dbmail @profile_name = @ProfileName, @recipients = @EmailRecipient, @body = @tableHTMLAll, @body_format = 'HTML', @subject = @SubjectText END SET NOCOUNT OFF END |

You can setup a schedule for this but I’ve just finished here to test the script.

The output of this script should look something like the HTML output below, which was generated by the SELECT @tableHTMLAll line above, just before the send mail command, in case you wanted to check the output yourself.

This is quite a robust way to automate the processing of your tabular model but bear in mind that errors generated during processing aren’t captured & will just spit out as a failed Agent job. You may also need to split up your processing into multiple tasks in the job, depending on the size of your model & the version of SQL Server you use. (Tabular models are limited to using 16GB in Standard edition!). Please let me know if anyone finds a way of capturing processing errors or a better way to do what I’ve described. This was a learning process for me and I hope it will help others out.

References

- Tabular Model Scripting Language (TMSL) Reference

- Analysis Services Scripting Language (ASSL for XMLA)

- Refresh command (TMSL)

- How to secure Reporting Services with Group Managed Service Accounts (GMSA) - November 7, 2018

- Contribute, contribute, contribute! - June 20, 2018

- Top 10 things you must document in SQL Server Reporting Services (SSRS) - February 26, 2018